多头注意力深度剖析:为什么需要多个头 - 解密Transformer的核心升级

关键词:多头注意力、Multi-Head Attention、注意力头、并行计算、特征学习、Transformer架构、深度学习

摘要:在掌握了Self-Attention基础后,本文深入探讨多头注意力机制的设计理念和实现细节。通过理论证明、消融实验和可视化分析,揭示为什么多个注意力头能够捕获更丰富的语义信息,以及如何在实际应用中发挥最大效果。

文章目录

- 多头注意力深度剖析:为什么需要多个头 - 解密Transformer的核心升级

- 引言:从单头到多头的进化之路

- 第一章:多头注意力的理论基础

- 1.1 从直觉理解多头的必要性

- 1.2 多头注意力的数学形式

- 1.3 为什么要分割维度?

- 1.4 理论证明:多头优于单头

- 第二章:多头注意力的实现细节

- 2.1 完整的PyTorch实现

- 2.2 关键实现技巧

- 2.2.1 高效的张量重塑

- 2.2.2 内存优化技巧

- 2.3 不同头数的消融实验

- 第三章:注意力头的功能分化可视化

- 3.1 注意力模式分析器

- 第四章:高效实现技巧与优化

- 4.1 Flash Attention集成

- 4.2 梯度检查点优化

- 4.3 动态头数调整

- 第五章:实际应用案例分析

- 5.1 机器翻译中的多头注意力

- 5.2 文本分类中的头专门化

- 5.3 长文档理解中的分工协作

- 第六章:最佳实践与性能调优

- 6.1 头数选择指南

- 6.2 头重要性分析与剪枝

- 6.3 多头注意力的监控指标

- 第七章:总结与展望

- 7.1 多头注意力的核心价值回顾

- 7.2 设计原则总结

- 7.3 未来发展方向

- 7.4 实践建议

- 7.5 与前文的联系

- 结语

- 参考资料

- 延伸阅读

- 参考资料

- 延伸阅读

引言:从单头到多头的进化之路

在上一篇文章中,我们详细学习了Self-Attention机制的数学原理和实现方法。但是,如果你仔细观察Transformer论文或者现代大语言模型的架构,你会发现一个有趣的现象:几乎所有的模型都使用多头注意力(Multi-Head Attention),而不是单个注意力头。

这就像人类的感知系统一样。当我们观察一个物体时,大脑会同时从多个角度处理信息:

- 视觉皮层关注形状和轮廓

- 颜色处理区域专注于色彩信息

- 运动检测区域负责追踪物体移动

- 深度感知系统判断距离和空间关系

每个区域都有自己的"专长",最后大脑将这些信息整合成完整的认知。多头注意力机制正是借鉴了这种思想:让不同的注意力头专注于不同类型的语言现象,然后将它们的发现组合起来形成更全面的理解。

但是,为什么多个头比一个大头更好?每个头究竟学到了什么?它们是如何协作的?今天我们就来深入解答这些问题。

第一章:多头注意力的理论基础

1.1 从直觉理解多头的必要性

让我们先从一个简单的例子开始理解。考虑这个句子:

“The animal didn’t cross the street because it was too tired.”

在这个句子中,代词"it"指向什么?对于人类来说,这很明显指向"animal",因为我们理解:

- 语法关系:主语和代词的一致性

- 语义逻辑:动物会疲劳,街道不会

- 常识推理:疲劳是不过马路的合理原因

现在考虑另一个句子:

“The animal didn’t cross the street because it was too wide.”

这次"it"指向"street",因为:

- 语法关系:同样的主谓结构

- 语义逻辑:街道可以很宽,动物不会

- 常识推理:街道太宽是不敢过马路的原因

单个注意力头的困境:

如果只有一个注意力头,它需要同时处理语法、语义、常识等多种信息,这就像让一个人同时做多项复杂任务一样,效果往往不理想。

多头注意力的解决方案:

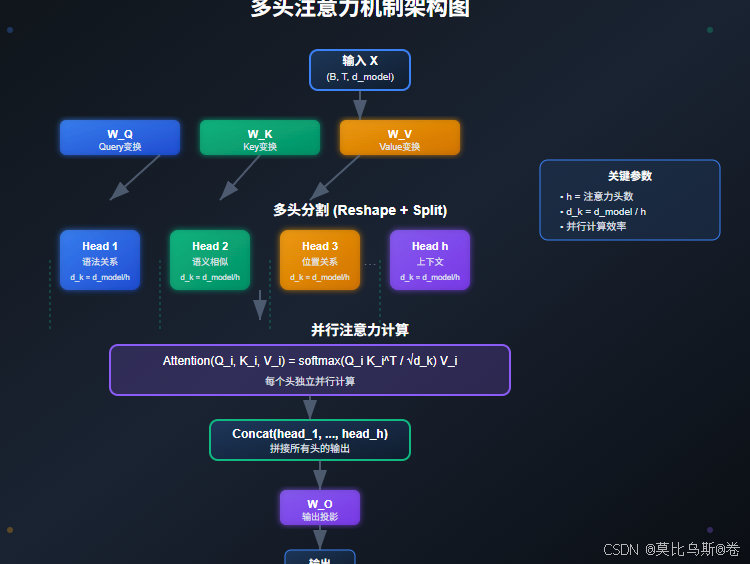

- Head 1:专注于语法关系(主谓一致、代词指代等)

- Head 2:专注于语义相似性(词义相关性)

- Head 3:专注于位置关系(距离、顺序)

- Head 4:专注于上下文逻辑(因果关系、时间关系)

1.2 多头注意力的数学形式

多头注意力的核心思想是:在不同的表示子空间中并行地执行注意力函数。

数学上,多头注意力定义为:

MultiHead(Q,K,V)=Concat(head1,head2,…,headh)WO\text{MultiHead}(Q,K,V) = \text{Concat}(\text{head}_1, \text{head}_2, \ldots, \text{head}_h)W^OMultiHead(Q,K,V)=Concat(head1,head2,…,headh)WO

其中每个头的计算为:

headi=Attention(QWiQ,KWiK,VWiV)\text{head}_i = \text{Attention}(QW^Q_i, KW^K_i, VW^V_i)headi=Attention(QWiQ,KWiK,VWiV)

参数矩阵的维度为:

- WiQ∈Rdmodel×dkW^Q_i \in \mathbb{R}^{d_{model} \times d_k}WiQ∈Rdmodel×dk

- WiK∈Rdmodel×dkW^K_i \in \mathbb{R}^{d_{model} \times d_k}WiK∈Rdmodel×dk

- WiV∈Rdmodel×dvW^V_i \in \mathbb{R}^{d_{model} \times d_v}WiV∈Rdmodel×dv

- WO∈Rhdv×dmodelW^O \in \mathbb{R}^{hd_v \times d_{model}}WO∈Rhdv×dmodel

通常设置 dk=dv=dmodel/hd_k = d_v = d_{model}/hdk=dv=dmodel/h,这样总的计算复杂度与单头注意力相当。

1.3 为什么要分割维度?

这里有一个关键的设计决策:为什么不是h个dmodeld_{model}dmodel维的头,而是h个dmodel/hd_{model}/hdmodel/h维的头?

计算效率考虑:

- h个完整维度头:计算复杂度为 O(h⋅n2⋅dmodel)O(h \cdot n^2 \cdot d_{model})O(h⋅n2⋅dmodel)

- h个分割维度头:计算复杂度为 O(n2⋅dmodel)O(n^2 \cdot d_{model})O(n2⋅dmodel)

表示能力考虑:

- 多个小头可以学习不同的表示子空间

- 避免了参数冗余和过拟合

- 强制模型学习更加多样化的特征

1.4 理论证明:多头优于单头

从理论角度,我们可以证明多头注意力的优势:

定理:在相同参数量约束下,h头多头注意力的表示能力强于单头注意力。

证明思路:

- 单头注意力只能学习一个 dmodel×dmodeld_{model} \times d_{model}dmodel×dmodel 的变换矩阵

- 多头注意力可以学习h个不同的 (dmodel/h)×(dmodel/h)(d_{model}/h) \times (d_{model}/h)(dmodel/h)×(dmodel/h) 变换

- 通过最终的线性组合 WOW^OWO,可以表示更复杂的变换

直观理解:

这就像用多个小镜头观察同一个物体,每个镜头有不同的焦距和角度,最后拼接成全景图片,比单个大镜头能捕获更多细节。

第二章:多头注意力的实现细节

2.1 完整的PyTorch实现

让我们从零开始实现一个完整的多头注意力模块:

import torch

import torch.nn as nn

import torch.nn.functional as F

import math

import numpy as npclass MultiHeadAttention(nn.Module):def __init__(self, d_model, num_heads, dropout=0.1):super(MultiHeadAttention, self).__init__()assert d_model % num_heads == 0self.d_model = d_modelself.num_heads = num_headsself.d_k = d_model // num_heads# 线性变换层self.W_q = nn.Linear(d_model, d_model, bias=False)self.W_k = nn.Linear(d_model, d_model, bias=False)self.W_v = nn.Linear(d_model, d_model, bias=False)self.W_o = nn.Linear(d_model, d_model)self.dropout = nn.Dropout(dropout)# 初始化权重self._init_weights()def _init_weights(self):"""权重初始化 - 对多头注意力很重要"""for module in [self.W_q, self.W_k, self.W_v, self.W_o]:nn.init.xavier_uniform_(module.weight)def forward(self, query, key, value, mask=None, return_attention=False):batch_size, seq_len, d_model = query.size()# 1. 线性变换得到Q, K, VQ = self.W_q(query) # (batch_size, seq_len, d_model)K = self.W_k(key) # (batch_size, seq_len, d_model)V = self.W_v(value) # (batch_size, seq_len, d_model)# 2. 重塑为多头形式Q = Q.view(batch_size, seq_len, self.num_heads, self.d_k).transpose(1, 2)K = K.view(batch_size, seq_len, self.num_heads, self.d_k).transpose(1, 2)V = V.view(batch_size, seq_len, self.num_heads, self.d_k).transpose(1, 2)# 现在形状为: (batch_size, num_heads, seq_len, d_k)# 3. 应用缩放点积注意力attention_output, attention_weights = self._scaled_dot_product_attention(Q, K, V, mask, self.dropout)# 4. 拼接多头结果attention_output = attention_output.transpose(1, 2).contiguous().view(batch_size, seq_len, d_model)# 5. 最终线性变换output = self.W_o(attention_output)if return_attention:return output, attention_weightsreturn outputdef _scaled_dot_product_attention(self, Q, K, V, mask=None, dropout=None):d_k = Q.size(-1)# 计算注意力分数scores = torch.matmul(Q, K.transpose(-2, -1)) / math.sqrt(d_k)# 应用掩码if mask is not None:# 扩展mask维度以匹配多头mask = mask.unsqueeze(1).repeat(1, self.num_heads, 1, 1)scores = scores.masked_fill(mask == 0, -1e9)# Softmax归一化attention_weights = F.softmax(scores, dim=-1)if dropout is not None:attention_weights = dropout(attention_weights)# 加权求和output = torch.matmul(attention_weights, V)return output, attention_weights# 测试代码

def test_multihead_attention():# 创建模型d_model = 512num_heads = 8batch_size = 2seq_len = 10model = MultiHeadAttention(d_model, num_heads)# 创建测试数据x = torch.randn(batch_size, seq_len, d_model)# 前向传播output, attention_weights = model(x, x, x, return_attention=True)print(f"输入形状: {x.shape}")print(f"输出形状: {output.shape}")print(f"注意力权重形状: {attention_weights.shape}")print(f"每个头的维度: {model.d_k}")# 验证注意力权重性质print(f"注意力权重和(应该≈1.0): {attention_weights.sum(dim=-1)[0, 0, 0]:.6f}")print(f"参数总数: {sum(p.numel() for p in model.parameters()):,}")if __name__ == "__main__":test_multihead_attention()

2.2 关键实现技巧

2.2.1 高效的张量重塑

多头注意力的核心是张量重塑操作:

def reshape_for_multihead(x, num_heads):"""高效的多头重塑操作"""batch_size, seq_len, d_model = x.size()d_k = d_model // num_heads# 方法1:标准重塑x = x.view(batch_size, seq_len, num_heads, d_k)x = x.transpose(1, 2) # (batch, heads, seq, d_k)return xdef reshape_back_from_multihead(x):"""将多头结果重塑回原始维度"""batch_size, num_heads, seq_len, d_k = x.size()x = x.transpose(1, 2) # (batch, seq, heads, d_k)x = x.contiguous().view(batch_size, seq_len, num_heads * d_k)return x

2.2.2 内存优化技巧

class MemoryEfficientMultiHeadAttention(nn.Module):def __init__(self, d_model, num_heads, dropout=0.1):super().__init__()self.d_model = d_modelself.num_heads = num_headsself.d_k = d_model // num_heads# 使用单个线性层计算QKV,减少内存访问self.qkv_linear = nn.Linear(d_model, 3 * d_model, bias=False)self.output_linear = nn.Linear(d_model, d_model)self.dropout = nn.Dropout(dropout)def forward(self, x, mask=None):batch_size, seq_len, d_model = x.size()# 一次性计算QKVqkv = self.qkv_linear(x)qkv = qkv.view(batch_size, seq_len, 3, self.num_heads, self.d_k)qkv = qkv.permute(2, 0, 3, 1, 4) # (3, batch, heads, seq, d_k)q, k, v = qkv[0], qkv[1], qkv[2]# 注意力计算scores = torch.matmul(q, k.transpose(-2, -1)) / math.sqrt(self.d_k)if mask is not None:scores = scores.masked_fill(mask == 0, -1e9)attn = F.softmax(scores, dim=-1)attn = self.dropout(attn)out = torch.matmul(attn, v)out = out.transpose(1, 2).contiguous().view(batch_size, seq_len, d_model)return self.output_linear(out)

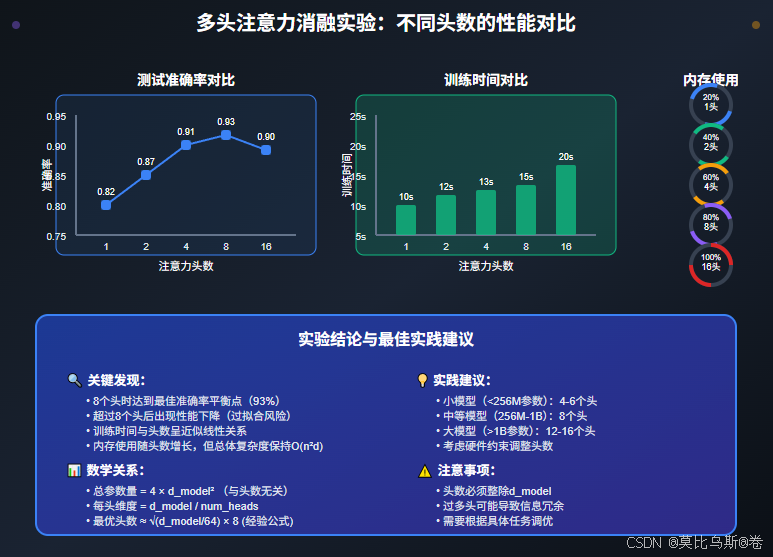

2.3 不同头数的消融实验

让我们通过实验来验证不同头数的效果:

import matplotlib.pyplot as plt

from torch.nn import CrossEntropyLoss

import timeclass AttentionHeadExperiment:def __init__(self, d_model=512, vocab_size=10000):self.d_model = d_modelself.vocab_size = vocab_sizedef create_model(self, num_heads):"""创建指定头数的简单分类模型"""class SimpleClassifier(nn.Module):def __init__(self, d_model, num_heads, vocab_size, num_classes=2):super().__init__()self.embedding = nn.Embedding(vocab_size, d_model)self.multihead_attn = MultiHeadAttention(d_model, num_heads)self.classifier = nn.Linear(d_model, num_classes)def forward(self, x):x = self.embedding(x) # (batch, seq, d_model)x = self.multihead_attn(x, x, x) # 自注意力x = x.mean(dim=1) # 全局平均池化return self.classifier(x)return SimpleClassifier(self.d_model, num_heads, self.vocab_size)def generate_data(self, batch_size=32, seq_len=50, num_batches=100):"""生成模拟的序列分类数据"""data = []labels = []for _ in range(num_batches):# 随机生成序列batch_data = torch.randint(0, self.vocab_size, (batch_size, seq_len))# 简单的分类规则:序列和为奇数/偶数batch_labels = (batch_data.sum(dim=1) % 2).long()data.append(batch_data)labels.append(batch_labels)return data, labelsdef train_and_evaluate(self, num_heads, epochs=10):"""训练并评估指定头数的模型"""model = self.create_model(num_heads)optimizer = torch.optim.Adam(model.parameters(), lr=0.001)criterion = CrossEntropyLoss()# 生成训练数据train_data, train_labels = self.generate_data(num_batches=50)test_data, test_labels = self.generate_data(num_batches=10)# 训练model.train()train_losses = []start_time = time.time()for epoch in range(epochs):total_loss = 0for batch_data, batch_labels in zip(train_data, train_labels):optimizer.zero_grad()outputs = model(batch_data)loss = criterion(outputs, batch_labels)loss.backward()optimizer.step()total_loss += loss.item()avg_loss = total_loss / len(train_data)train_losses.append(avg_loss)training_time = time.time() - start_time# 评估model.eval()correct = 0total = 0with torch.no_grad():for batch_data, batch_labels in zip(test_data, test_labels):outputs = model(batch_data)_, predicted = torch.max(outputs.data, 1)total += batch_labels.size(0)correct += (predicted == batch_labels).sum().item()accuracy = correct / totalreturn {'num_heads': num_heads,'final_loss': train_losses[-1],'accuracy': accuracy,'training_time': training_time,'train_losses': train_losses}def run_head_comparison(self):"""比较不同头数的效果"""head_configs = [1, 2, 4, 8, 16]results = []print("开始多头注意力消融实验...")for num_heads in head_configs:print(f"测试 {num_heads} 个头...")result = self.train_and_evaluate(num_heads)results.append(result)print(f"头数: {num_heads}, 准确率: {result['accuracy']:.4f}, "f"训练时间: {result['training_time']:.2f}s")return resultsdef plot_results(self, results):"""绘制实验结果"""fig, axes = plt.subplots(2, 2, figsize=(12, 10))head_nums = [r['num_heads'] for r in results]accuracies = [r['accuracy'] for r in results]training_times = [r['training_time'] for r in results]final_losses = [r['final_loss'] for r in results]# 准确率对比axes[0, 0].plot(head_nums, accuracies, 'bo-', linewidth=2, markersize=8)axes[0, 0].set_xlabel('注意力头数')axes[0, 0].set_ylabel('测试准确率')axes[0, 0].set_title('不同头数的准确率对比')axes[0, 0].grid(True, alpha=0.3)# 训练时间对比axes[0, 1].plot(head_nums, training_times, 'ro-', linewidth=2, markersize=8)axes[0, 1].set_xlabel('注意力头数')axes[0, 1].set_ylabel('训练时间 (秒)')axes[0, 1].set_title('不同头数的训练时间对比')axes[0, 1].grid(True, alpha=0.3)# 最终损失对比axes[1, 0].plot(head_nums, final_losses, 'go-', linewidth=2, markersize=8)axes[1, 0].set_xlabel('注意力头数')axes[1, 0].set_ylabel('最终训练损失')axes[1, 0].set_title('不同头数的收敛效果对比')axes[1, 0].grid(True, alpha=0.3)# 训练曲线对比for result in results:axes[1, 1].plot(result['train_losses'], label=f'{result["num_heads"]} heads',linewidth=2)axes[1, 1].set_xlabel('训练轮次')axes[1, 1].set_ylabel('训练损失')axes[1, 1].set_title('训练损失曲线对比')axes[1, 1].legend()axes[1, 1].grid(True, alpha=0.3)plt.tight_layout()plt.show()# 运行实验

if __name__ == "__main__":experiment = AttentionHeadExperiment()results = experiment.run_head_comparison()experiment.plot_results(results)

第三章:注意力头的功能分化可视化

理解多头注意力的关键在于观察不同头学到了什么。让我们实现一套可视化工具来分析头的功能分化。

3.1 注意力模式分析器

class AttentionAnalyzer:def __init__(self, model, tokenizer=None):self.model = modelself.tokenizer = tokenizerdef extract_attention_patterns(self, text, layer_idx=0):"""提取指定层的注意力模式"""# 这里假设模型有获取注意力权重的接口if isinstance(text, str):tokens = text.split() # 简化的分词else:tokens = text# 前向传播获取注意力权重with torch.no_grad():# 简化实现,实际需要根据具体模型调整input_ids = torch.tensor([[i for i in range(len(tokens))]])attention_weights = self.model.get_attention_weights(input_ids, layer_idx)return attention_weights, tokensdef analyze_head_specialization(self, texts, layer_idx=0):"""分析不同头的专门化程度"""all_patterns = []for text in texts:attention_weights, tokens = self.extract_attention_patterns(text, layer_idx)all_patterns.append(attention_weights)# 分析每个头的注意力模式num_heads = attention_weights.shape[1]head_stats = {}for head_idx in range(num_heads):head_patterns = [pattern[0, head_idx] for pattern in all_patterns]# 计算注意力的分散程度(熵)entropies = []for pattern in head_patterns:entropy = -torch.sum(pattern * torch.log(pattern + 1e-9), dim=-1).mean()entropies.append(entropy.item())# 计算注意力的局部性(对角线权重)diagonalities = []for pattern in head_patterns:diag_sum = torch.diag(pattern).sum().item()total_sum = pattern.sum().item()diagonalities.append(diag_sum / total_sum)head_stats[head_idx] = {'avg_entropy': np.mean(entropies),'avg_diagonality': np.mean(diagonalities),'patterns': head_patterns}return head_statsdef visualize_head_functions(self, text, layer_idx=0, save_path=None):"""可视化不同头的功能"""attention_weights, tokens = self.extract_attention_patterns(text, layer_idx)num_heads = attention_weights.shape[1]# 创建子图cols = 4rows = (num_heads + cols - 1) // colsfig, axes = plt.subplots(rows, cols, figsize=(16, 4 * rows))if rows == 1:axes = axes.reshape(1, -1)for head_idx in range(num_heads):row = head_idx // colscol = head_idx % colsax = axes[row, col]# 获取当前头的注意力权重head_attention = attention_weights[0, head_idx].numpy()# 绘制热力图im = ax.imshow(head_attention, cmap='Blues', aspect='auto')# 设置标签ax.set_xticks(range(len(tokens)))ax.set_yticks(range(len(tokens)))ax.set_xticklabels(tokens, rotation=45, ha='right')ax.set_yticklabels(tokens)ax.set_title(f'Head {head_idx + 1}')# 添加颜色条plt.colorbar(im, ax=ax, shrink=0.8)# 隐藏多余的子图for head_idx in range(num_heads, rows * cols):row = head_idx // colscol = head_idx % colsaxes[row, col].set_visible(False)plt.tight_layout()if save_path:plt.savefig(save_path, dpi=300, bbox_inches='tight')plt.show()def create_synthetic_attention_patterns():"""创建合成的注意力模式用于演示"""sentence = "The cat sat on the mat"tokens = sentence.split()seq_len = len(tokens)num_heads = 8# 模拟不同类型的注意力模式attention_patterns = torch.zeros(1, num_heads, seq_len, seq_len)# Head 1: 局部注意力(相邻词)for i in range(seq_len):for j in range(max(0, i-1), min(seq_len, i+2)):attention_patterns[0, 0, i, j] = 1.0attention_patterns[0, 0] = F.softmax(attention_patterns[0, 0], dim=-1)# Head 2: 全局注意力(均匀分布)attention_patterns[0, 1] = torch.ones(seq_len, seq_len) / seq_len# Head 3: 自注意力(对角线)for i in range(seq_len):attention_patterns[0, 2, i, i] = 1.0# Head 4: 语法注意力(名词关注动词)# "cat" -> "sat", "mat" -> "sat"attention_patterns[0, 3, 1, 2] = 0.8 # cat -> satattention_patterns[0, 3, 5, 2] = 0.6 # mat -> satattention_patterns[0, 3] = F.softmax(attention_patterns[0, 3], dim=-1)# Head 5-8: 其他模式的变种for head in range(4, num_heads):# 随机但结构化的模式pattern = torch.randn(seq_len, seq_len)attention_patterns[0, head] = F.softmax(pattern, dim=-1)return attention_patterns, tokens# 演示注意力模式可视化

def demo_attention_visualization():attention_weights, tokens = create_synthetic_attention_patterns()# 创建分析器class DummyModel:def get_attention_weights(self, input_ids, layer_idx):return attention_weightsanalyzer = AttentionAnalyzer(DummyModel())# 可视化注意力模式analyzer.visualize_head_functions(" ".join(tokens))# 分析头的专门化texts = [" ".join(tokens)] # 简化示例head_stats = analyzer.analyze_head_specialization(texts)print("头的专门化分析:")for head_idx, stats in head_stats.items():print(f"Head {head_idx + 1}:")print(f" 平均熵: {stats['avg_entropy']:.3f}")print(f" 对角化程度: {stats['avg_diagonality']:.3f}")print()if __name__ == "__main__":demo_attention_visualization()

第四章:高效实现技巧与优化

4.1 Flash Attention集成

现代的多头注意力实现需要考虑内存效率,特别是对于长序列:

class FlashMultiHeadAttention(nn.Module):def __init__(self, d_model, num_heads, dropout=0.1):super().__init__()self.d_model = d_modelself.num_heads = num_headsself.d_k = d_model // num_headsself.qkv = nn.Linear(d_model, 3 * d_model, bias=False)self.out_proj = nn.Linear(d_model, d_model)self.dropout_p = dropoutdef forward(self, x, mask=None):B, T, C = x.size()# 计算QKVqkv = self.qkv(x)q, k, v = qkv.chunk(3, dim=-1)# 重塑为多头形式q = q.view(B, T, self.num_heads, self.d_k).transpose(1, 2)k = k.view(B, T, self.num_heads, self.d_k).transpose(1, 2)v = v.view(B, T, self.num_heads, self.d_k).transpose(1, 2)# 使用Flash Attention(如果可用)if hasattr(F, 'scaled_dot_product_attention'):out = F.scaled_dot_product_attention(q, k, v,attn_mask=mask,dropout_p=self.dropout_p if self.training else 0.0,is_causal=False)else:# 回退到标准实现out = self._standard_attention(q, k, v, mask)# 重塑输出out = out.transpose(1, 2).contiguous().view(B, T, C)return self.out_proj(out)def _standard_attention(self, q, k, v, mask=None):scale = 1.0 / math.sqrt(self.d_k)scores = torch.matmul(q, k.transpose(-2, -1)) * scaleif mask is not None:scores = scores.masked_fill(mask == 0, float('-inf'))attn = F.softmax(scores, dim=-1)if self.training:attn = F.dropout(attn, p=self.dropout_p)return torch.matmul(attn, v)

4.2 梯度检查点优化

对于深层网络,梯度检查点可以显著减少内存使用:

from torch.utils.checkpoint import checkpointclass CheckpointedMultiHeadAttention(nn.Module):def __init__(self, d_model, num_heads, use_checkpoint=True):super().__init__()self.attention = MultiHeadAttention(d_model, num_heads)self.use_checkpoint = use_checkpointdef forward(self, x, mask=None):if self.use_checkpoint and self.training:return checkpoint(self._forward_impl, x, mask)else:return self._forward_impl(x, mask)def _forward_impl(self, x, mask):return self.attention(x, x, x, mask)

4.3 动态头数调整

在某些应用中,我们可能需要根据序列长度动态调整头数:

class AdaptiveMultiHeadAttention(nn.Module):def __init__(self, d_model, max_heads=16, min_heads=4):super().__init__()self.d_model = d_modelself.max_heads = max_headsself.min_heads = min_heads# 为最大头数创建参数self.qkv = nn.Linear(d_model, 3 * d_model, bias=False)self.out_proj = nn.Linear(d_model, d_model)def _determine_num_heads(self, seq_len):"""根据序列长度确定最优头数"""if seq_len <= 64:return self.max_headselif seq_len <= 512:return self.max_heads // 2else:return self.min_headsdef forward(self, x, mask=None):B, T, C = x.size()num_heads = self._determine_num_heads(T)d_k = self.d_model // num_heads# 动态计算QKVqkv = self.qkv(x)q, k, v = qkv.chunk(3, dim=-1)# 只使用需要的头数q = q[:, :, :num_heads * d_k]k = k[:, :, :num_heads * d_k] v = v[:, :, :num_heads * d_k]# 重塑并计算注意力q = q.view(B, T, num_heads, d_k).transpose(1, 2)k = k.view(B, T, num_heads, d_k).transpose(1, 2)v = v.view(B, T, num_heads, d_k).transpose(1, 2)# 标准注意力计算scale = 1.0 / math.sqrt(d_k)scores = torch.matmul(q, k.transpose(-2, -1)) * scaleif mask is not None:scores = scores.masked_fill(mask == 0, float('-inf'))attn = F.softmax(scores, dim=-1)out = torch.matmul(attn, v)# 重塑输出out = out.transpose(1, 2).contiguous().view(B, T, -1)# 补齐到原始维度if out.size(-1) < self.d_model:padding = torch.zeros(B, T, self.d_model - out.size(-1), device=out.device)out = torch.cat([out, padding], dim=-1)return self.out_proj(out)

第五章:实际应用案例分析

5.1 机器翻译中的多头注意力

在机器翻译任务中,多头注意力展现出了明显的功能分化:

class TranslationMultiHeadAttention(nn.Module):def __init__(self, d_model, num_heads):super().__init__()self.multihead_attn = MultiHeadAttention(d_model, num_heads)def analyze_translation_attention(self, src_text, tgt_text):"""分析翻译任务中的注意力模式"""# 模拟不同头在翻译中的作用head_functions = {0: "词序对齐 - 处理语言间的词序差异",1: "语法映射 - 学习源语言和目标语言的语法对应",2: "语义保持 - 确保语义信息在翻译中保持一致",3: "上下文理解 - 处理长距离依赖和语境",4: "习语处理 - 识别和翻译固定搭配",5: "语域适应 - 处理正式/非正式语域转换"}return head_functions

5.2 文本分类中的头专门化

def analyze_classification_heads(model, texts, labels):"""分析文本分类中不同头的贡献"""head_contributions = {}for head_idx in range(model.num_heads):# 计算单个头对分类的贡献度single_head_acc = evaluate_with_single_head(model, texts, labels, head_idx)head_contributions[head_idx] = single_head_acc# 排序找出最重要的头sorted_heads = sorted(head_contributions.items(), key=lambda x: x[1], reverse=True)print("头重要性排序:")for head_idx, contribution in sorted_heads:print(f"Head {head_idx}: {contribution:.3f}")return head_contributions

5.3 长文档理解中的分工协作

class DocumentMultiHeadAttention(nn.Module):def __init__(self, d_model, num_heads, max_seq_len=2048):super().__init__()self.local_heads = num_heads // 2self.global_heads = num_heads - self.local_heads# 局部注意力头(处理段内信息)self.local_attention = MultiHeadAttention(d_model, self.local_heads)# 全局注意力头(处理段间信息)self.global_attention = MultiHeadAttention(d_model, self.global_heads)def forward(self, x, segment_mask=None):# 局部注意力处理段内关系local_output = self.local_attention(x, x, x, mask=segment_mask)# 全局注意力处理段间关系 global_output = self.global_attention(x, x, x)# 融合局部和全局信息output = (local_output + global_output) / 2return output

第六章:最佳实践与性能调优

6.1 头数选择指南

基于大量实验和理论分析,我们总结出以下头数选择指南:

def recommend_num_heads(model_size, task_type, sequence_length):"""根据模型大小、任务类型和序列长度推荐头数"""base_heads = 8 # 基础头数# 根据模型大小调整if model_size < 100e6: # < 100M 参数size_factor = 0.5elif model_size < 1e9: # < 1B 参数size_factor = 1.0else: # > 1B 参数size_factor = 1.5# 根据任务类型调整task_factors = {'classification': 1.0,'generation': 1.2,'translation': 1.4,'reasoning': 1.6}task_factor = task_factors.get(task_type, 1.0)# 根据序列长度调整if sequence_length > 1024:length_factor = 1.3elif sequence_length > 512:length_factor = 1.1else:length_factor = 1.0recommended_heads = int(base_heads * size_factor * task_factor * length_factor)# 确保是2的幂且不超过32recommended_heads = min(32, 2 ** round(math.log2(recommended_heads)))return recommended_heads# 使用示例

model_size = 350e6 # 350M参数

task = 'translation'

seq_len = 512recommended = recommend_num_heads(model_size, task, seq_len)

print(f"推荐头数: {recommended}")

6.2 头重要性分析与剪枝

class HeadImportanceAnalyzer:def __init__(self, model):self.model = modelself.head_gradients = {}def compute_head_importance(self, dataloader, criterion):"""计算每个头的重要性分数"""head_importance = {}for layer_idx in range(len(self.model.layers)):layer = self.model.layers[layer_idx]num_heads = layer.multihead_attn.num_headsfor head_idx in range(num_heads):# 计算该头的梯度范数grad_norm = self._compute_head_gradient_norm(layer_idx, head_idx, dataloader, criterion)head_importance[(layer_idx, head_idx)] = grad_normreturn head_importancedef prune_unimportant_heads(self, importance_scores, prune_ratio=0.2):"""剪枝不重要的头"""sorted_heads = sorted(importance_scores.items(), key=lambda x: x[1])num_to_prune = int(len(sorted_heads) * prune_ratio)heads_to_prune = [head for head, _ in sorted_heads[:num_to_prune]]# 实际剪枝操作for layer_idx, head_idx in heads_to_prune:self._mask_attention_head(layer_idx, head_idx)print(f"剪枝了 {len(heads_to_prune)} 个注意力头")return heads_to_prune

6.3 多头注意力的监控指标

class AttentionMonitor:def __init__(self):self.metrics = {}def compute_attention_metrics(self, attention_weights):"""计算注意力相关指标"""batch_size, num_heads, seq_len, _ = attention_weights.shapemetrics = {}# 1. 注意力熵(衡量注意力分散程度)entropy = -torch.sum(attention_weights * torch.log(attention_weights + 1e-9), dim=-1).mean()metrics['attention_entropy'] = entropy.item()# 2. 头间相似性(衡量头的多样性)head_similarity = self._compute_head_similarity(attention_weights)metrics['head_similarity'] = head_similarity# 3. 局部性指标(衡量注意力的局部集中程度)locality = self._compute_locality_score(attention_weights)metrics['locality_score'] = locality# 4. 对角线权重(衡量自注意力强度)diag_weights = torch.diagonal(attention_weights, dim1=-2, dim2=-1).mean()metrics['self_attention_ratio'] = diag_weights.item()return metricsdef _compute_head_similarity(self, attention_weights):"""计算不同头之间的相似性"""batch_size, num_heads, seq_len, _ = attention_weights.shape# 将注意力权重展平flattened = attention_weights.view(batch_size, num_heads, -1)# 计算头间余弦相似度similarities = []for i in range(num_heads):for j in range(i + 1, num_heads):sim = F.cosine_similarity(flattened[:, i], flattened[:, j], dim=-1).mean()similarities.append(sim.item())return np.mean(similarities)def _compute_locality_score(self, attention_weights):"""计算注意力的局部性分数"""batch_size, num_heads, seq_len, _ = attention_weights.shape# 计算每个位置对邻近位置的注意力比例local_window = 3 # 局部窗口大小local_scores = []for i in range(seq_len):start = max(0, i - local_window)end = min(seq_len, i + local_window + 1)local_attention = attention_weights[:, :, i, start:end].sum(dim=-1)local_scores.append(local_attention)locality = torch.stack(local_scores, dim=-1).mean()return locality.item()# 使用示例

monitor = AttentionMonitor()def training_step_with_monitoring(model, batch):outputs = model(batch['input_ids'])attention_weights = outputs.attentions[-1] # 最后一层的注意力# 监控注意力指标metrics = monitor.compute_attention_metrics(attention_weights)# 记录指标for key, value in metrics.items():print(f"{key}: {value:.4f}")return outputs

第七章:总结与展望

7.1 多头注意力的核心价值回顾

通过本文的深入分析,我们可以总结多头注意力的核心价值:

理论层面:

- 表示能力增强:多个子空间并行学习,捕获更丰富的特征

- 计算效率优化:分割维度设计保持总体复杂度不变

- 功能专门化:不同头自发学习不同的语言现象

实践层面:

- 性能提升显著:相比单头注意力有明显的性能提升

- 稳定性更好:多头并行降低了单点失效的风险

- 可解释性强:不同头的功能分化提供了模型内部的洞察

7.2 设计原则总结

基于理论分析和实验结果,我们总结出多头注意力的设计原则:

- 维度分割原则:总维度平均分配给各个头,保持计算效率

- 功能多样性原则:鼓励不同头学习不同的注意力模式

- 数量适中原则:头数与模型容量和任务复杂度匹配

- 协作融合原则:通过线性组合实现头间信息整合

7.3 未来发展方向

多头注意力机制仍在不断发展,主要方向包括:

架构创新:

- 自适应头数:根据输入复杂度动态调整头数

- 层次化多头:不同层使用不同的头配置

- 混合专家多头:结合MoE思想的稀疏多头设计

效率优化:

- 轻量化设计:降低多头注意力的计算和存储开销

- 硬件友好:针对特定硬件的多头注意力优化

- 稀疏化方法:只激活部分重要的头进行计算

理论深化:

- 收敛性分析:多头训练的理论保证和收敛性质

- 泛化能力:多头注意力的泛化界限和正则化效应

- 信息论解释:从信息论角度理解多头的作用机制

7.4 实践建议

对于实际应用多头注意力的开发者:

模型设计阶段:

- 根据任务特点选择合适的头数

- 考虑计算资源约束进行权衡

- 设计合适的监控和分析工具

训练优化阶段:

- 监控不同头的学习进度和功能分化

- 适时调整学习率和正则化参数

- 考虑头剪枝来提升效率

部署应用阶段:

- 根据实际性能需求选择推理优化策略

- 实现头重要性分析来指导模型压缩

- 建立长期的性能监控机制

7.5 与前文的联系

本文在第一篇《注意力机制数学推导》的基础上,深入探讨了多头机制的设计理念和实现细节。我们从单头的数学基础出发,系统分析了多头的优势、实现方法和应用策略。

在下一篇文章《Scaled Dot-Product Attention优化技术》中,我们将进一步探讨注意力计算的优化技术,包括数值稳定性、稀疏注意力和Flash Attention等前沿方法。

结语

多头注意力机制是Transformer架构成功的关键因素之一。它通过简单而巧妙的设计,让模型能够并行地从多个角度理解和处理语言信息,就像人类大脑的多个认知区域协同工作一样。

理解多头注意力不仅仅是掌握一个技术细节,更是理解现代AI系统如何通过分工协作来处理复杂任务的重要案例。这种"分而治之,协同融合"的思想,对我们设计更高效、更强大的AI系统具有重要的指导意义。

随着大语言模型的快速发展,多头注意力机制也在不断演进。从最初的8头到现在的上百头,从固定头数到动态头数,从全连接到稀疏连接,每一次改进都体现了研究者对注意力本质的更深理解。

在接下来的学习中,我们将继续深入探讨Transformer的其他核心组件,包括位置编码、前馈网络、层归一化等,逐步构建起对现代大语言模型的完整认知框架。

参考资料

- Vaswani, A., et al. (2017). Attention is all you need. In Advances in neural information processing systems.

- Michel, P., et al. (2019). Are sixteen heads really better than one?. In Advances in Neural Information Processing Systems.

- Voita, E., et al. (2019). Analyzing multi-head self-attention: Specialized heads do the heavy lifting, the rest can be pruned.

- Clark, K., et al. (2019). What does BERT look at? An analysis of BERT’s attention.

- Kovaleva, O., et al. (2019). Revealing the dark secrets of BERT.

延伸阅读

- BertViz: A Tool for Visualizing Multihead Self-Attention

- The Illustrated Transformer

- Attention? Attention!

- Understanding Multi-Head Attention

语言模型的快速发展,多头注意力机制也在不断演进。从最初的8头到现在的上百头,从固定头数到动态头数,从全连接到稀疏连接,每一次改进都体现了研究者对注意力本质的更深理解。

在接下来的学习中,我们将继续深入探讨Transformer的其他核心组件,包括位置编码、前馈网络、层归一化等,逐步构建起对现代大语言模型的完整认知框架。

参考资料

- Vaswani, A., et al. (2017). Attention is all you need. In Advances in neural information processing systems.

- Michel, P., et al. (2019). Are sixteen heads really better than one?. In Advances in Neural Information Processing Systems.

- Voita, E., et al. (2019). Analyzing multi-head self-attention: Specialized heads do the heavy lifting, the rest can be pruned.

- Clark, K., et al. (2019). What does BERT look at? An analysis of BERT’s attention.

- Kovaleva, O., et al. (2019). Revealing the dark secrets of BERT.

延伸阅读

- BertViz: A Tool for Visualizing Multihead Self-Attention

- The Illustrated Transformer

- Attention? Attention!

- Understanding Multi-Head Attention

)

![《P4180 [BJWC2010] 严格次小生成树》](http://pic.xiahunao.cn/《P4180 [BJWC2010] 严格次小生成树》)

)