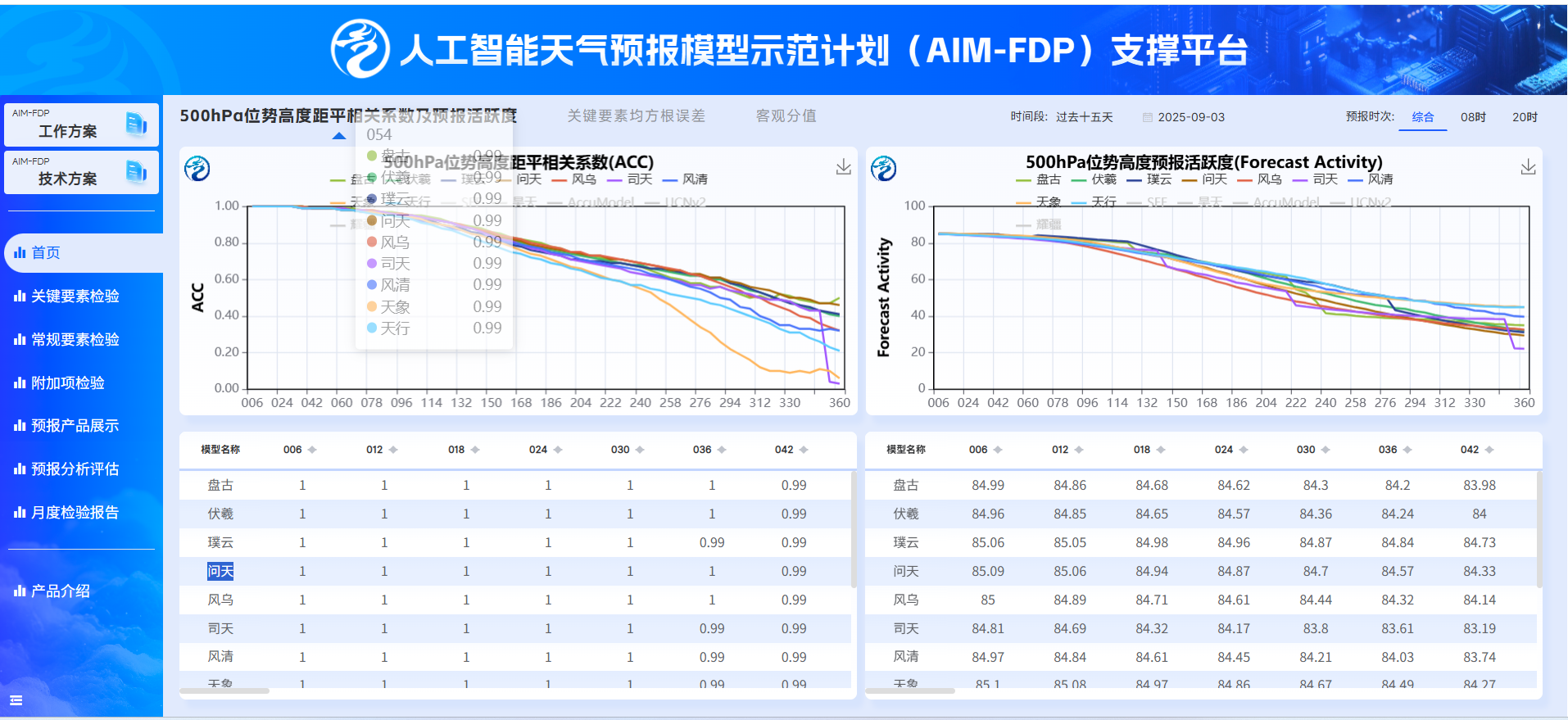

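Basic idea: port the FuXi 1.0/2.0 models published on GitHub to an Ascend server. The FuXi 2.0 weights were not released, so I test the inference pipeline with weights I generated myself. I had already ported the Wenhai (问海) and Wentian (问天) models for the AI weather forecast model demonstration program (AIM-FDP) support platform, and this time I moved on to FuXi.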

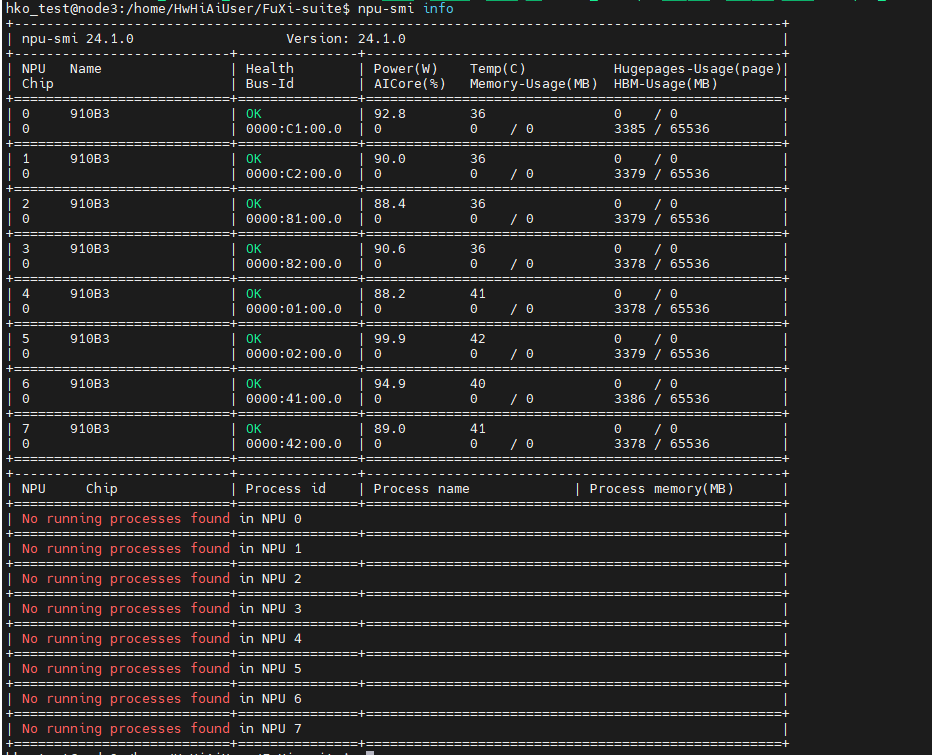

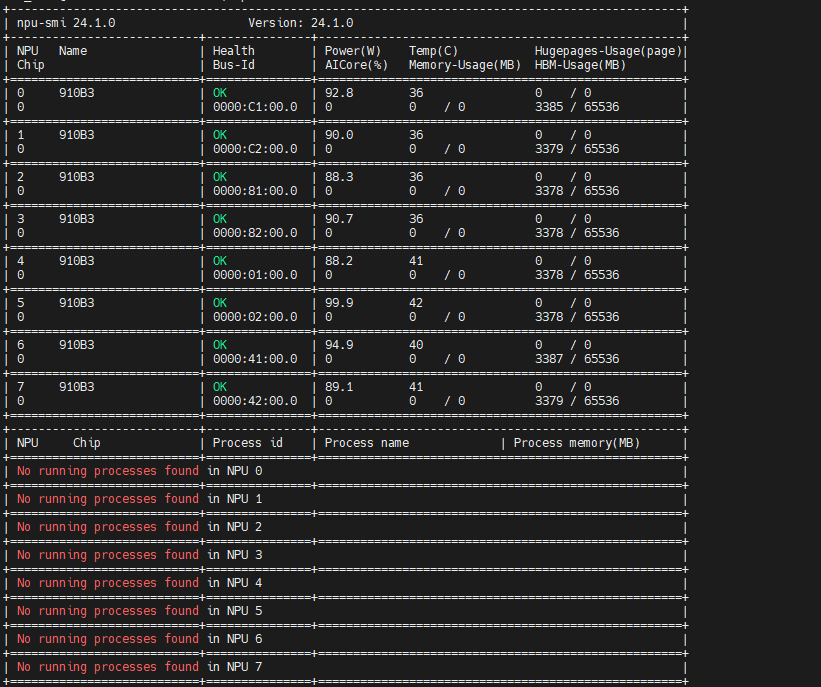

1. First, you need an Ascend 800I A2 server with compatible firmware and drivers already installed.

Source code: GitHub - tpys/FuXi: A cascade machine learning forecasting system for 15-day global weather forecast

2. Build an image. This part is simple, so I only record it briefly; users can skip straight to step 3.

root@2024-12-29-2025-9-28:~# vim /etc/docker/daemon.json

Fill in the following:

{ "insecure-registries": ["https://swr.cn-east-317.qdrgznjszx.com"], "registry-mirrors": ["https://docker.mirrors.ustc.edu.cn"] }
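Writing the snippet above over an existing /etc/docker/daemon.json discards any other settings already in it, and a malformed file prevents the Docker daemon from starting. As a minimal sketch of a safer edit, the hypothetical helper `merge_daemon_config` below parses the existing file (raising on invalid JSON), adds the two registry entries without duplicating them, and keeps every other key.

```python
import json
from pathlib import Path


def merge_daemon_config(path, insecure_registries, registry_mirrors):
    """Merge registry settings into a Docker daemon.json, keeping existing keys."""
    p = Path(path)
    raw = p.read_text() if p.exists() else ""
    # json.loads raises on a malformed file instead of silently overwriting it
    config = json.loads(raw) if raw.strip() else {}
    for key, values in (("insecure-registries", insecure_registries),
                        ("registry-mirrors", registry_mirrors)):
        current = list(config.get(key, []))
        for value in values:
            if value not in current:  # avoid duplicate entries on re-runs
                current.append(value)
        config[key] = current
    p.write_text(json.dumps(config, indent=2) + "\n")
    return config
```

Run it as root with `"/etc/docker/daemon.json"`, `["https://swr.cn-east-317.qdrgznjszx.com"]` and `["https://docker.mirrors.ustc.edu.cn"]`, then restart Docker as below.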

Save and exit, then restart Docker:

root@2024-12-29-2025-9-28:~# systemctl restart docker.service

root@2024-12-29-2025-9-28:~# docker pull swr.cn-east-317.qdrgznjszx.com/sxj731533730/ubuntu:20.04

20.04: Pulling from sxj731533730/ubuntu

edab87ea811e: Pull complete

Digest: sha256:b2d9e5ff9781680bab26f7d366a6f5ab803df91ef0737343ab2998335a00abe1

Status: Downloaded newer image for swr.cn-east-317.qdrgznjszx.com/sxj731533730/ubuntu:20.04

swr.cn-east-317.qdrgznjszx.com/sxj731533730/ubuntu:20.04

Create and enter the container with the following script:

    #!/bin/bash
    docker_images=swr.cn-east-317.qdrgznjszx.com/sxj731533730/ubuntu:20.04
    model_dir=/home/HwHiAiUser  # change the mounted directory to suit your setup

    docker run -it -d -p 1800:22 --name pycharm --ipc=host \
        --device=/dev/davinci1 \
        --device=/dev/davinci2 \
        --device=/dev/davinci3 \
        --device=/dev/davinci4 \
        --device=/dev/davinci5 \
        --device=/dev/davinci6 \
        --device=/dev/davinci7 \
        --device=/dev/davinci8 \
        --device=/dev/davinci_manager \
        --device=/dev/devmm_svm \
        --device=/dev/hisi_hdc \
        -v /usr/local/dcmi:/usr/local/dcmi \
        -v /usr/local/bin/npu-smi:/usr/local/bin/npu-smi \
        -v /usr/local/Ascend/driver/lib64/common:/usr/local/Ascend/driver/lib64/common \
        -v /usr/local/Ascend/driver/lib64/driver:/usr/local/Ascend/driver/lib64/driver \
        -v /etc/ascend_install.info:/etc/ascend_install.info \
        -v /etc/vnpu.cfg:/etc/vnpu.cfg \
        -v /usr/local/Ascend/driver/version.info:/usr/local/Ascend/driver/version.info \
        -v ${model_dir}:${model_dir} \
        -v /var/log/npu:/usr/slog ${docker_images} \
        /bin/bash

Create the container, enter it, and set up the FuXi environment inside it so that it can be packaged for other users.
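The `docker run` line is long enough that a misplaced `\` easily breaks it when edited by hand. As a sketch, the hypothetical helper `build_docker_run` below assembles the same argument list from a list of device IDs. The IDs are an input because the set of `/dev/davinci*` nodes depends on the host (the script above uses 1 to 8), so list them with `ls /dev/davinci*` first.

```python
def build_docker_run(image, name, model_dir, device_ids, ssh_port=1800):
    """Assemble the docker run argument list used in the script above."""
    args = ["docker", "run", "-it", "-d", "-p", f"{ssh_port}:22",
            "--name", name, "--ipc=host"]
    # One --device flag per NPU device node, then the shared management nodes
    args += [f"--device=/dev/davinci{i}" for i in device_ids]
    args += [f"--device=/dev/{node}" for node in ("davinci_manager", "devmm_svm", "hisi_hdc")]
    # Host paths mounted at the same location inside the container
    same_path_mounts = [
        "/usr/local/dcmi",
        "/usr/local/bin/npu-smi",
        "/usr/local/Ascend/driver/lib64/common",
        "/usr/local/Ascend/driver/lib64/driver",
        "/etc/ascend_install.info",
        "/etc/vnpu.cfg",
        "/usr/local/Ascend/driver/version.info",
        model_dir,
    ]
    for path in same_path_mounts:
        args += ["-v", f"{path}:{path}"]
    # NPU logs go to /usr/slog in the container; image and command come last
    args += ["-v", "/var/log/npu:/usr/slog", image, "/bin/bash"]
    return args
```

The list can be passed straight to `subprocess.run`, or turned back into a shell line with `shlex.join` (Python 3.8+) to paste into `ppp.sh`.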

hko_test@node3:~$ sudo vim ppp.sh

[sudo] password for hko_test:

Paste in the script above.

hko_test@node3:~$ bash ppp.sh

20f9abbd434fbde9403c2951b94322e7598a6185ea086ad4d4217e21a0d92c3b

hko_test@node3:~$ docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

20f9abbd434f swr.cn-east-317.qdrgznjszx.com/sxj731533730/ubuntu:20.04 "/bin/bash" 3 seconds ago Up 2 seconds 0.0.0.0:1800->22/tcp, [::]:1800->22/tcp pycharm

hko_test@node3:~$ docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

20f9abbd434f swr.cn-east-317.qdrgznjszx.com/sxj731533730/ubuntu:20.04 "/bin/bash" 9 seconds ago Up 8 seconds 0.0.0.0:1800->22/tcp, [::]:1800->22/tcp pycharm

hko_test@node3:~$ docker start 20f

20f

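hko_test@node3:~$ docker exec -it 20f /bin/bash

Inside the container, set up a self-contained Docker environment. This keeps users isolated from the physical host, and the resulting image works out of the box on any host of the same model.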

root@node3:/# apt-get update

root@node3:/# apt-get install cmake gcc g++ make vim git python3-pip python3-dev libopencv-dev ffmpeg ssh openssh-server openssh-client tree

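root@node3:/# pip3 install numpy torch==2.4.0 torch_npu torchvision==0.19.0 scikit-learn onnx onnxruntime mnn ncnn pyaml decorator attrs psutil matplotlib argparse xarray netcdf4 -i https://mirrors.tuna.tsinghua.edu.cn/pypi/web/simple

Next, change the SSH port and access settings inside the container so the container environment can be used remotely. Make sure port 1800 is open on the server; the `-p 1800:22` mapping in the docker run command forwards it to port 22 in the container.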

root@node3:/# vim /etc/ssh/sshd_config

Set the following options:

    Port 22
    PermitRootLogin yes
    PasswordAuthentication yes

root@20f9abbd434f:/# service ssh start

* Starting OpenBSD Secure Shell server sshd [ OK ]

root@20f9abbd434f:/# /etc/init.d/ssh restart

* Restarting OpenBSD Secure Shell server sshd
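Editing sshd_config in vim is fine for a one-off; to script this step when rebuilding the image, here is a minimal sketch. The hypothetical helper `set_sshd_options` replaces the first line (commented out or not) that sets each keyword and appends any keyword it does not find. Replacing the first occurrence matters because sshd uses the first value it reads for a keyword. It does not handle `Match` blocks or `Include` files.

```python
import re


def set_sshd_options(text, options):
    """Return sshd_config text with each keyword in `options` set to its value."""
    remaining = dict(options)
    out = []
    for line in text.splitlines():
        # Matches "Keyword value" and "#Keyword value"; plain comments like
        # "# Authentication:" do not match because ":" follows the word
        m = re.match(r"^\s*#?\s*(\w+)\s+", line)
        key = m.group(1) if m else None
        if key in remaining:
            out.append(f"{key} {remaining.pop(key)}")
        else:
            out.append(line)
    # Keywords that never appeared are appended at the end
    out += [f"{key} {value}" for key, value in remaining.items()]
    return "\n".join(out) + "\n"
```

Write the result back to /etc/ssh/sshd_config and run `sshd -t` to validate it before restarting the service.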

Set the container's root password (I used the same password as on the host):

root@node3:/# passwd root

New password:

Retype new password:

passwd: password updated successfully

Download the inference package, which lets Python run inference on OM models:

From tools: Ascend tools - Gitee.com, copy the following two files to /home/HwHiAiUser/sxj731533730/:

aclruntime-0.0.2-cp38-cp38-linux_aarch64.whl

ais_bench-0.0.2-py3-none-any.whl

root@20f9abbd434f:/# pip3 install /home/HwHiAiUser/sxj731533730/*.whl

Processing /home/HwHiAiUser/sxj731533730/aclruntime-0.0.2-cp38-cp38-linux_aarch64.whl

Processing /home/HwHiAiUser/sxj731533730/ais_bench-0.0.2-py3-none-any.whl

Processing /home/HwHiAiUser/sxj731533730/eccodes_python-0.9.9-py2.py3-none-any.whl

Requirement already satisfied: tqdm in /usr/local/lib/python3.8/dist-packages (from ais-bench==0.0.2) (4.67.1)

Requirement already satisfied: attrs>=21.3.0 in /usr/local/lib/python3.8/dist-packages (from ais-bench==0.0.2) (25.3.0)

Requirement already satisfied: numpy in /usr/local/lib/python3.8/dist-packages (from ais-bench==0.0.2) (1.24.4)

Collecting cffi

Downloading cffi-1.17.1-cp38-cp38-manylinux_2_17_aarch64.manylinux2014_aarch64.whl (448 kB)

|████████████████████████████████| 448 kB 367 kB/s

Collecting pycparser

Downloading pycparser-2.22-py3-none-any.whl (117 kB)

|████████████████████████████████| 117 kB 7.5 MB/s

Installing collected packages: aclruntime, ais-bench, pycparser, cffi, eccodes-python

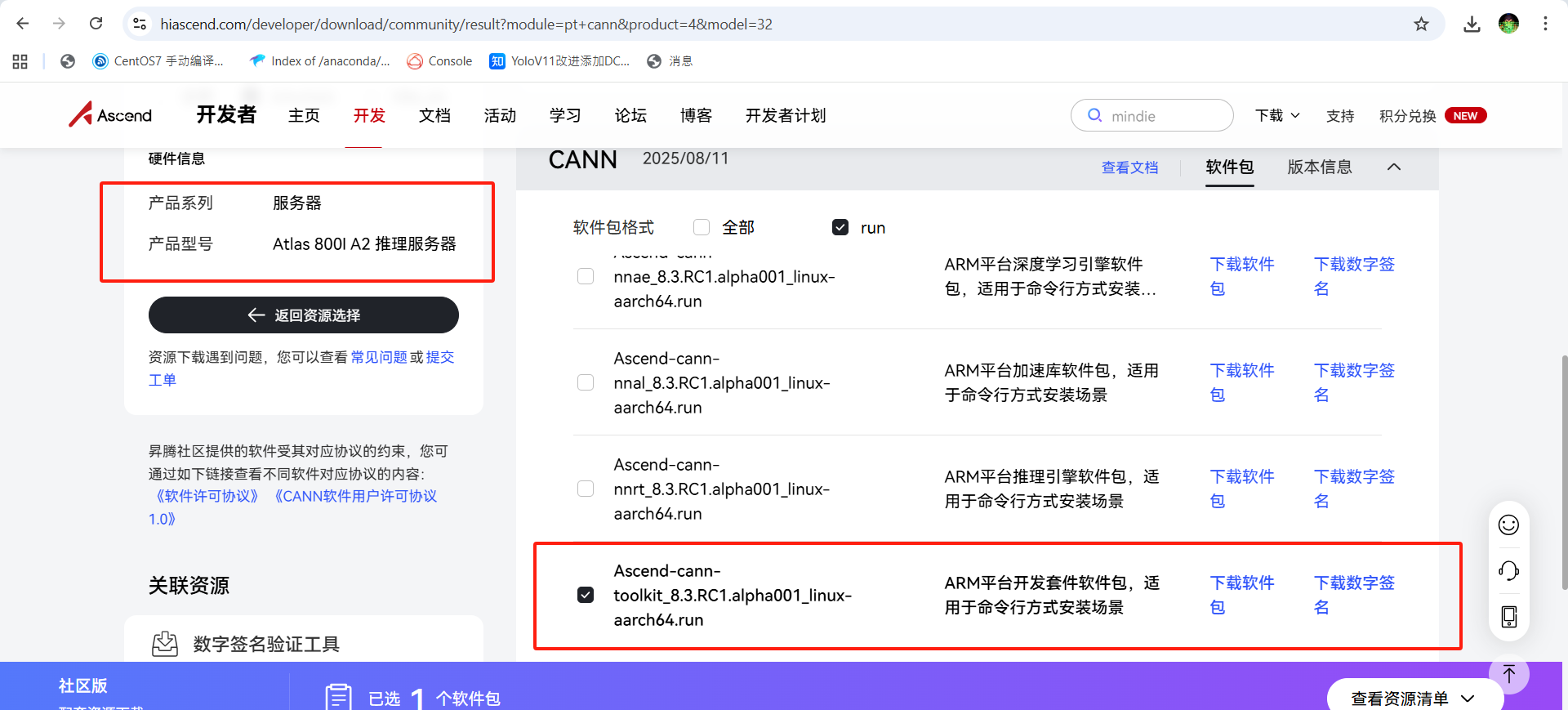

Successfully installed aclruntime-0.0.2 ais-bench-0.0.2 cffi-1.17.1 eccodes-python-0.9.9 pycparser-2.22

Next, download the CANN toolkit package, which provides the model-conversion tools, and install it:

root@20f9abbd434f:/# ./home/HwHiAiUser/Ascend-cann-toolkit_8.0.0_linux-aarch64.run --full --force

root@20f9abbd434f:/# vim ~/.bashrc

Append the following lines (the paths use 8.0.0, the toolkit version installed above):

    source /usr/local/Ascend/ascend-toolkit/set_env.sh
    export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/usr/local/Ascend/driver/lib64/driver/
    export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/usr/local/Ascend/driver/lib64/common/
    export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/usr/local/Ascend/ascend-toolkit/8.0.0/atc/lib64/
    export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/usr/local/Ascend/ascend-toolkit/8.0.0/runtime/lib64/stub/linux/aarch64/

root@20f9abbd434f:/# find / -name libascend_hal.so

/usr/local/Ascend/ascend-toolkit/8.0.0/runtime/lib64/stub/linux/aarch64/libascend_hal.so

/usr/local/Ascend/ascend-toolkit/8.0.0/runtime/lib64/stub/linux/x86_64/libascend_hal.so

/usr/local/Ascend/ascend-toolkit/8.0.0/runtime/lib64/stub/libascend_hal.so

/usr/local/Ascend/ascend-toolkit/8.0.0/aarch64-linux/devlib/linux/aarch64/libascend_hal.so

/usr/local/Ascend/ascend-toolkit/8.0.0/aarch64-linux/devlib/linux/x86_64/libascend_hal.so

/usr/local/Ascend/ascend-toolkit/8.0.0/aarch64-linux/devlib/libascend_hal.so

/usr/local/Ascend/ascend-toolkit/latest/aarch64-linux/devlib/linux/aarch64/libascend_hal.so

/usr/local/Ascend/ascend-toolkit/latest/aarch64-linux/devlib/linux/x86_64/libascend_hal.so

/usr/local/Ascend/ascend-toolkit/latest/aarch64-linux/devlib/libascend_hal.so

/usr/local/Ascend/driver/lib64/driver/libascend_hal.so

root@20f9abbd434f:/# cp /usr/local/Ascend/ascend-toolkit/latest/aarch64-linux/devlib/libascend_hal.so /usr/lib
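The ~/.bashrc entries and the copied libascend_hal.so are easy to get out of sync with the installed toolkit version, and a stale `LD_LIBRARY_PATH` entry fails silently. A small stdlib sketch, with hypothetical helper names: `find_files` mirrors `find ROOT -name FILE`, and `missing_lib_dirs` flags `LD_LIBRARY_PATH` entries that are not existing directories.

```python
import os


def find_files(root, filename):
    """Walk `root` like `find root -name filename` and return sorted matches."""
    hits = []
    for dirpath, _dirnames, filenames in os.walk(root):
        if filename in filenames:
            hits.append(os.path.join(dirpath, filename))
    return sorted(hits)


def missing_lib_dirs(ld_library_path):
    """Return the LD_LIBRARY_PATH entries that do not exist as directories."""
    return [d for d in ld_library_path.split(":") if d and not os.path.isdir(d)]
```

After `source ~/.bashrc`, `missing_lib_dirs(os.environ.get("LD_LIBRARY_PATH", ""))` should return an empty list; `find_files("/usr/local/Ascend", "libascend_hal.so")` is much faster than walking `/`.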

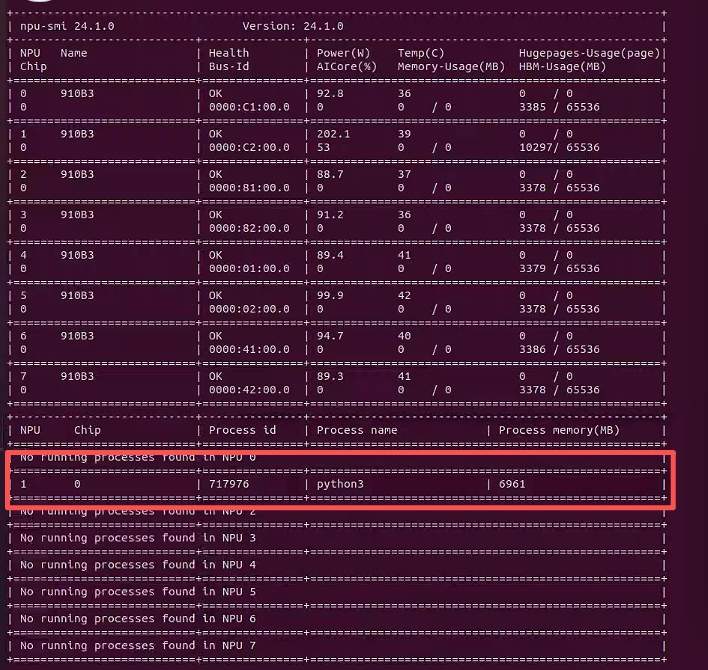

The NPU information is visible from inside the container.
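Before committing the container, it can be worth confirming that the Python packages installed earlier actually import. This is a hedged sketch: `check_imports` is a hypothetical helper, and importing `torch_npu` typically needs a shell where `set_env.sh` has been sourced.

```python
import importlib


def check_imports(module_names):
    """Try to import each module; map its name to a version string or an error."""
    report = {}
    for name in module_names:
        try:
            module = importlib.import_module(name)
            report[name] = str(getattr(module, "__version__", "ok"))
        except Exception as exc:  # ImportError, or a native extension failing to load
            report[name] = f"FAILED: {exc!r}"
    return report
```

For this image, a reasonable list is `["numpy", "torch", "torch_npu", "xarray", "netCDF4", "pandas", "onnx", "onnxruntime", "ais_bench", "aclruntime"]`; any entry starting with `FAILED` points to a package to fix before `docker commit`.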

The environment configuration is now complete and the container is usable, so commit it as a new image version:

hko_test@node3:~$ docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

20f9abbd434f swr.cn-east-317.qdrgznjszx.com/sxj731533730/ubuntu:20.04 "/bin/bash" About an hour ago Up About an hour 0.0.0.0:1800->22/tcp, [::]:1800->22/tcp pycharm

hko_test@node3:~$ docker commit 20f9abbd434f ubuntu20.04:v1

sha256:df89b8ddb0cbfdfe1bbcda356317b909a09b4f6c9b1e2898bbe08ddb2fd7182b

3. Users can start directly from this step: enter the container, convert the models, and run the tests.

hko_test@node3:~$ docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

20f9abbd434f swr.cn-east-317.qdrgznjszx.com/sxj731533730/ubuntu:20.04 "/bin/bash" About an hour ago Up About an hour 0.0.0.0:1800->22/tcp, [::]:1800->22/tcp pycharm

hko_test@node3:~$ docker start 20f9abbd434f

20f9abbd434f

hko_test@node3:~$ docker exec -it 20f9abbd434f /bin/bash

Convert the models:

root@20f9abbd434f:/home/HwHiAiUser/sxj731533730/FuXi-suite/FuXi_EC# atc --model=short.onnx --framework=5 --output=short --input_format=ND --input_shape="input:1,2,70,721,1440;temb:1,12" --log=error --soc_version=Ascend910B3

ATC start working now, please wait for a moment.

.Warning: tiling offset out of range, index: 32

..Warning: tiling offset out of range, index: 32

.Warning: tiling offset out of range, index: 32

......

ATC run success, welcome to the next use.

root@20f9abbd434f:/home/HwHiAiUser/sxj731533730/FuXi-suite/FuXi_EC# atc --model=medium.onnx --framework=5 --output=medium --input_format=ND --input_shape="input:1,2,70,721,1440;temb:1,12" --log=error --soc_version=Ascend910B3

ATC start working now, please wait for a moment.

.............

ATC run success, welcome to the next use.
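The two `atc` invocations differ only in the stage name, and fuxi.py also loads long.om when `--num_steps` is given three values. A sketch that builds the same command for any stage, assuming the ONNX files sit in the working directory as above. `atc_command` is a hypothetical helper, and `Ascend910B3` is simply the value used above (check your chip with `npu-smi info`).

```python
def atc_command(stage, soc_version="Ascend910B3",
                input_shape="input:1,2,70,721,1440;temb:1,12"):
    """Build the atc argument list used above for one FuXi stage."""
    return [
        "atc",
        f"--model={stage}.onnx",
        "--framework=5",              # 5 = ONNX
        f"--output={stage}",          # atc writes <stage>.om
        "--input_format=ND",
        f"--input_shape={input_shape}",
        "--log=error",
        f"--soc_version={soc_version}",
    ]
```

Looping `subprocess.run(atc_command(stage), check=True)` over `("short", "medium", "long")` converts all three. In list form no shell is involved, so the `;` inside `--input_shape` needs no quoting.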

Results on the CPU server:

Results on the Ascend NPU server:

root@20f9abbd434f:/home/HwHiAiUser/sxj731533730/fuxi1_om# cat fuxi.py

    import argparse
    import os
    import time

    import numpy as np
    import pandas as pd
    import xarray as xr

    from ais_bench.infer.interface import InferSession
    from util import save_like

    parser = argparse.ArgumentParser()
    parser.add_argument('--model', type=str, default="/home/HwHiAiUser/sxj731533730/FuXi-suite/FuXi_EC", required=False, help="FuXi OM model dir")
    parser.add_argument('--input', type=str, default="/home/HwHiAiUser/sxj731533730/FuXi-suite/Sample_Data/nc/20231012-06_input_netcdf.nc", required=False, help="The input data file, store in netcdf format")
    parser.add_argument('--save_dir', type=str, default="")
    parser.add_argument('--num_steps', type=int, nargs="+", default=[20])
    args = parser.parse_args()


    def time_encoding(init_time, total_step, freq=6):
        # For each step, encode three 6-hourly timestamps as (day_of_year, hour) with sin/cos
        init_time = np.array([init_time])
        tembs = []
        for i in range(total_step):
            hours = np.array([pd.Timedelta(hours=t*freq) for t in [i-1, i, i+1]])
            times = init_time[:, None] + hours[None]
            times = [pd.Period(t, 'H') for t in times.reshape(-1)]
            times = [(p.day_of_year/366, p.hour/24) for p in times]
            temb = np.array(times, dtype=np.float32)
            temb = np.concatenate([np.sin(temb), np.cos(temb)], axis=-1)
            temb = temb.reshape(1, -1)
            tembs.append(temb)
        return np.stack(tembs)


    def load_model(model_name):
        # Load the OM model on NPU device 0
        session = InferSession(0, model_name)
        return session


    def run_inference(model_dir, data, num_steps, save_dir=""):
        total_step = sum(num_steps)
        init_time = pd.to_datetime(data.time.values[-1])
        tembs = time_encoding(init_time, total_step)

        print(f'init_time: {init_time.strftime(("%Y%m%d-%H"))}')
        print(f'latitude: {data.lat.values[0]} ~ {data.lat.values[-1]}')

        assert data.lat.values[0] == 90
        assert data.lat.values[-1] == -90

        input = data.values[None]
        print(f'input: {input.shape}, {input.min():.2f} ~ {input.max():.2f}')
        print(f'tembs: {tembs.shape}, {tembs.mean():.4f}')

        stages = ['short', 'medium', 'long']
        step = 0

        for i, num_step in enumerate(num_steps):
            stage = stages[i]
            start = time.perf_counter()
            model_name = os.path.join(model_dir, f"{stage}.om")
            print(f'Load model from {model_name} ...')
            session = load_model(model_name)
            load_time = time.perf_counter() - start
            print(f'Load model take {load_time:.2f} sec')

            print(f'Inference {stage} ...')
            start = time.perf_counter()

            for _ in range(0, num_step):
                temb = tembs[step]
                # onnxruntime equivalent: new_input, = session.run(None, {'input': input, 'temb': temb})
                new_input = session.infer([input, temb])[0]
                output = new_input[:, -1]
                save_like(output, data, step, save_dir)
                print(f'stage: {i}, step: {step+1:02d}, output: {output.min():.2f} {output.max():.2f}')
                input = new_input
                step += 1

            run_time = time.perf_counter() - start
            print(f'Inference {stage} take {run_time:.2f}')

            if step > total_step:
                break


    if __name__ == "__main__":
        data = xr.open_dataarray(args.input)
        run_inference(args.model, data, args.num_steps, args.save_dir)
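`time_encoding` turns each forecast step into a 12-value vector: three 6-hourly timestamps, each reduced to (day_of_year/366, hour/24), then passed through sin and cos. To check the `(20, 1, 12)` shape printed in the log without pandas or NumPy, here is a stdlib-only re-implementation. `time_encoding_py` is a hypothetical name; it computes in float64 while the original casts to float32 before sin/cos, so values agree only to float32 precision.

```python
import math
from datetime import datetime, timedelta


def time_encoding_py(init_time: datetime, total_step: int, freq: int = 6):
    """Stdlib re-implementation of time_encoding() from fuxi.py (for checking only).

    Step i encodes init + (i-1)*freq h, init + i*freq h and init + (i+1)*freq h.
    Returns a nested list with shape (total_step, 1, 12).
    """
    tembs = []
    for i in range(total_step):
        row = []
        for t in (i - 1, i, i + 1):
            when = init_time + timedelta(hours=t * freq)
            doy = when.timetuple().tm_yday / 366   # same as pd.Period.day_of_year / 366
            hour = when.hour / 24
            # Same order as np.concatenate([sin, cos], axis=-1) on a (3, 2) array
            # followed by reshape(1, -1): sin(doy), sin(hour), cos(doy), cos(hour)
            row += [math.sin(doy), math.sin(hour), math.cos(doy), math.cos(hour)]
        tembs.append([row])
    return tembs
```

With the init time from the log, `time_encoding_py(datetime(2023, 10, 12, 6), 20)` has 20 entries of one 12-value row each, matching `tembs: (20, 1, 12)`.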

root@20f9abbd434f:/home/HwHiAiUser/sxj731533730/fuxi1_om# python3 fuxi.py

init_time: 20231012-06

latitude: 90.0 ~ -90.0

input: (1, 2, 70, 721, 1440), -5345.88 ~ 205068.69

tembs: (20, 1, 12), 0.6652

Load model from /home/HwHiAiUser/sxj731533730/FuXi-suite/FuXi_EC/short.om ...

[INFO] acl init success

[INFO] open device 0 success

[INFO] create new context

[INFO] load model /home/HwHiAiUser/sxj731533730/FuXi-suite/FuXi_EC/short.om success

[INFO] create model description success

Load model take 13.06 sec

Inference short ...

stage: 0, step: 01, output: -5168.00 65504.00

stage: 0, step: 02, output: -5064.00 65504.00

stage: 0, step: 03, output: -4944.00 65504.00

stage: 0, step: 04, output: -4736.00 65504.00

stage: 0, step: 05, output: -4568.00 65504.00

stage: 0, step: 06, output: -4248.00 65504.00

stage: 0, step: 07, output: -3862.00 65504.00

stage: 0, step: 08, output: -3296.00 65504.00

stage: 0, step: 09, output: -2812.00 65504.00

stage: 0, step: 10, output: -2316.00 65504.00

stage: 0, step: 11, output: -1898.00 65504.00

stage: 0, step: 12, output: -2706.00 65504.00

stage: 0, step: 13, output: -3418.00 65504.00

stage: 0, step: 14, output: -3546.00 65504.00

stage: 0, step: 15, output: -3458.00 65504.00

stage: 0, step: 16, output: -3172.00 65504.00

stage: 0, step: 17, output: -2686.00 65504.00

stage: 0, step: 18, output: -2136.00 65504.00

stage: 0, step: 19, output: -2434.00 65504.00

stage: 0, step: 20, output: -2968.00 65504.00

Inference short take 18.40

[INFO] unload model success, model Id is 1

[INFO] end to reset device 0

[INFO] end to finalize acl

NPU memory usage:
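In the log above, the output maximum is exactly 65504.00 at every step. 65504 is the largest finite float16 value, and the input maximum (205068.69) is far beyond it, so this pattern suggests that some channels are saturating in half precision rather than holding genuine forecast values. That is an inference from the numbers, not something this run confirms. The sketch below assumes `output` is the NumPy array from fuxi.py (shape `(1, 70, 721, 1440)`, channels on axis 1); `saturated_channels` is a hypothetical helper that lists which channels hit the limit.

```python
import numpy as np

FP16_MAX = float(np.finfo(np.float16).max)  # 65504.0


def saturated_channels(output, channel_axis=1, limit=FP16_MAX):
    """Return {channel_index: fraction of grid points with |value| >= limit}."""
    x = np.moveaxis(np.asarray(output), channel_axis, 0)
    frac = (np.abs(x) >= limit).reshape(x.shape[0], -1).mean(axis=1)
    return {int(c): float(f) for c, f in enumerate(frac) if f > 0}
```

If many channels show up, re-converting with a higher-precision setting of ATC's `--precision_mode` option (for example `force_fp32`) may be worth trying; check the CANN documentation for the values your version supports.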
