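In modern AI application development, giving a chatbot memory and an understanding of context is a core challenge. Traditional stateless dialogue systems often cannot handle complex multi-turn conversations, especially when the user has to supply several pieces of information to complete a specific task.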

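This article discusses how to use runnables to orchestrate more interesting language model systems, and how to use running state chains to manage complex dialogue strategies and to reason over long documents.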

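Contents

- 1 Keeping Variables Flowing

- 2 Running State Chains

- 3 Implementing a Knowledge Base with a Running State Chain

- 4 Airline Customer Service Bot

- 5 Summary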

1 Keeping Variables Flowing

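In the previous examples, we implemented interesting logic in standalone chains by creating, modifying, and consuming state. That state was passed around as dictionaries with descriptive keys and useful values, and those values supplied later steps with the information they needed to operate. Recall the zero-shot classification example from the previous article: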

%%time
## ^^ This notebook is timed, which will print out how long it all took

from langchain_core.runnables import RunnableLambda
from langchain_nvidia_ai_endpoints import ChatNVIDIA
from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import ChatPromptTemplate
from typing import List, Union
from operator import itemgetter

## Zero-shot classification prompt and chain w/ explicit few-shot prompting
sys_msg = (
    "Choose the most likely topic classification given the sentence as context."
    " Only one word, no explanation.\n[Options : {options}]"
)
zsc_prompt = ChatPromptTemplate.from_template(
    f"{sys_msg}\n\n"
    "[[The sea is awesome]][/INST]boat</s><s>[INST]"
    "[[{input}]]"
)

## Define your simple instruct_model
instruct_chat = ChatNVIDIA(model="mistralai/mistral-7b-instruct-v0.2")
instruct_llm = instruct_chat | StrOutputParser()
one_word_llm = instruct_chat.bind(stop=[" ", "\n"]) | StrOutputParser()

zsc_chain = zsc_prompt | one_word_llm

## Function that just returns the first word of the output. With early stopping bind
def zsc_call(input, options=["car", "boat", "airplane", "bike"]):
    return zsc_chain.invoke({"input" : input, "options" : options}).split()[0]

print("-" * 80)
print(zsc_call("Should I take the next exit, or keep going to the next one?"))

print("-" * 80)
print(zsc_call("I get seasick, so I think I'll pass on the trip"))

print("-" * 80)
print(zsc_call("I'm scared of heights, so flying probably isn't for me"))

Output:

--------------------------------------------------------------------------------
car
--------------------------------------------------------------------------------
boat
--------------------------------------------------------------------------------
air
CPU times: user 23.4 ms, sys: 12.9 ms, total: 36.3 ms
Wall time: 1.29 s

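This chain makes several design decisions that make it very easy to use. The most important one is that we want it to behave like a function, so it should only generate its output and return it.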

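This makes the chain a natural module to include in a larger chain system. For example, the following chain takes a string, extracts the most likely topic, and then generates a new sentence based on that topic: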

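%%time
## ^^ This notebook is timed, which will print out how long it all took

## Minimal stand-ins for the PPrint/pprint helpers from the previous article
## (assumed behavior: print the value with a preface and pass it through unchanged)
from rich.console import Console

pprint = Console().print

def PPrint(preface="State: "):
    def print_and_return(x):
        pprint(preface, x)
        return x
    return RunnableLambda(print_and_return)

gen_prompt = ChatPromptTemplate.from_template(
    "Make a new sentence about the following topic: {topic}. Be creative!"
)
gen_chain = gen_prompt | instruct_llm

input_msg = "I get seasick, so I think I'll pass on the trip"
options = ["car", "boat", "airplane", "bike"]

chain = (
    ## -> {"input", "options"}
    {'topic' : zsc_chain}
    | PPrint()
    ## -> {"topic"}  (only the mapped key survives; "input" and "options" are dropped)
    | gen_chain
    ## -> string
)
chain.invoke({"input" : input_msg, "options" : options})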

Output:

State:
{'topic': 'boat'}
CPU times: user 23.6 ms, sys: 4.28 ms, total: 27.8 ms
Wall time: 1.03 s
" As the sun began to set, the children's eyes gleamed with excitement as they rowed their makeshift paper boat through the sea of rippling bathwater in the living room, creating waves of laughter and magic."

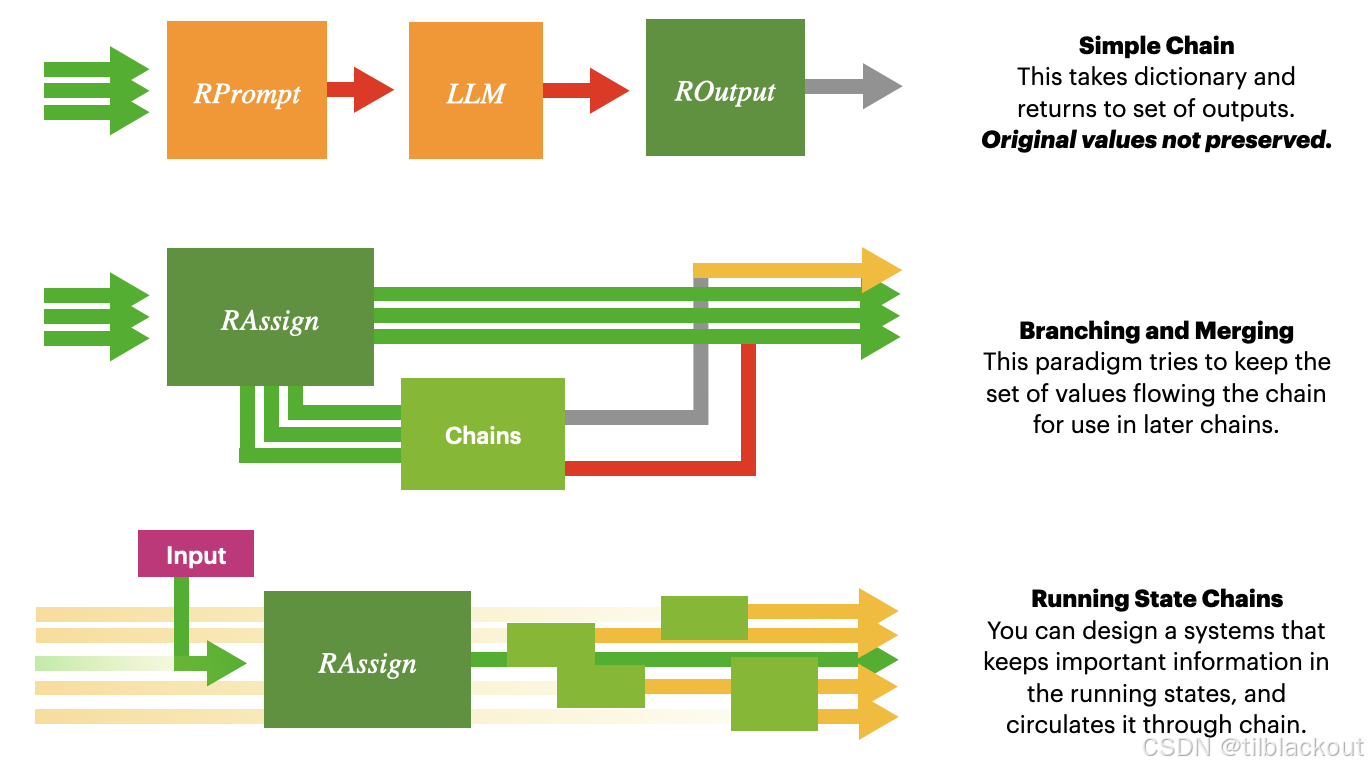

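However, this becomes a problem when you want to keep information flowing, because the topic and input variables are lost by the time the response is generated. In a simple chain, each step receives only the previous step's output, so the last step cannot access input and topic at the same time. If we want to do something with both the output and the input, we need a way to make sure both variables are carried through.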

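We can use a mapping runnable (a dictionary interpreted as a runnable, or an explicit RunnableMap) to carry both variables through, by assigning our chain's output to a single key and letting the other keys propagate as needed. Alternatively, we can use RunnableAssign, which by default merges the output of a state-consuming chain into the input dictionary.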

This way, we can propagate anything we want through our chain system:

%%time
## ^^ This notebook is timed, which will print out how long it all took

from langchain_core.runnables import RunnableBranch, RunnablePassthrough
from langchain_core.runnables.passthrough import RunnableAssign
from functools import partial

big_chain = (
    PPrint()
    ## Manual mapping. Sometimes useful inside a branch chain
    | {'input' : lambda d: d.get('input'), 'topic' : zsc_chain}
    | PPrint()
    ## RunnableAssign passing. Better suited to running state chains by default
    | RunnableAssign({'generation' : gen_chain})
    | PPrint()
    ## Use the input and the generation together
    | RunnableAssign({'combination' : (
        ChatPromptTemplate.from_template(
            "Consider the following passages:"
            "\nP1: {input}"
            "\nP2: {generation}"
            "\n\nCombine the ideas from both sentences into one simple one."
        )
        | instruct_llm
    )})
)

output = big_chain.invoke({
    "input" : "I get seasick, so I think I'll pass on the trip",
    "options" : ["car", "boat", "airplane", "bike", "unknown"]
})
pprint("Final Output: ", output)

Output:

State:
{'input': "I get seasick, so I think I'll pass on the trip", 'options': ['car', 'boat', 'airplane', 'bike', 'unknown']}

State:
{'input': "I get seasick, so I think I'll pass on the trip", 'topic': ' boat'}

State:
{
    'input': "I get seasick, so I think I'll pass on the trip",
    'topic': ' boat',
    'generation': " As the sun began to set, the children's eyes gleamed with excitement as they rowed their makeshift paper boat through the sea of rippling bathwater in the living room, creating waves of laughter and magic."
}

Final Output:
{
    'input': "I get seasick, so I think I'll pass on the trip",
    'topic': ' boat',
    'generation': " As the sun began to set, the children's eyes gleamed with excitement as they rowed their makeshift paper boat through the sea of rippling bathwater in the living room, creating waves of laughter and magic.",
    'combination': "Feeling seasick, I'll have to sit this one out, as the children excitedly navigate their paper boat in the makeshift ocean of our living room, their laughter filling the air."
}
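The difference between plain piping and assignment can be reproduced without any model. The sketch below is a framework-free illustration, not LangChain's API. `pipe` and `assign` are hypothetical helpers. `pipe` hands each step only the previous step's output, like `{'topic': zsc_chain} | gen_chain`. `assign` merges each branch's result into a copy of the incoming dictionary, which is the behavior described above for RunnableAssign. The classifier, generator, and combiner are toy stand-ins for the LLM-backed chains.

```python
from typing import Any, Callable, Dict

State = Dict[str, Any]

def pipe(*steps: Callable[[Any], Any]) -> Callable[[Any], Any]:
    """Run steps in order; each step only sees the previous step's output."""
    def run(x: Any) -> Any:
        for step in steps:
            x = step(x)
        return x
    return run

def assign(**branches: Callable[[State], Any]) -> Callable[[State], State]:
    """Hypothetical stand-in for RunnableAssign: every branch reads the incoming
    state, and its result is merged into a copy of that state under its key."""
    def run(state: State) -> State:
        return {**state, **{key: fn(state) for key, fn in branches.items()}}
    return run

# Toy stand-ins for zsc_chain, gen_chain and the combination prompt (no LLM calls)
classify = lambda s: "boat" if "seasick" in s["input"] else "car"
generate = lambda s: f"A sentence about a {s['topic']}."
combine = lambda s: f"{s['input']} / {s['generation']}"

start = {"input": "I get seasick, so I think I'll pass on the trip"}

# Plain mapping: only "topic" survives the first step, so combine() cannot find "input"
lossy = pipe(lambda s: {"topic": classify(s)}, assign(generation=generate), combine)
try:
    lossy(start)
    lost = None
except KeyError as err:
    lost = err.args[0]
print("lost key:", lost)  # lost key: input

# Assign-style running state: each step adds a key and nothing is dropped
keep_all = pipe(assign(topic=classify), assign(generation=generate), assign(combination=combine))
final = keep_all(start)
print(sorted(final))  # ['combination', 'generation', 'input', 'topic']
```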

2 Running State Chains

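The example above is a simple one. If anything, it shows the drawback of chaining many LLM calls together for internal reasoning. Still, keeping information flowing through a chain is invaluable for building complex chains that accumulate useful state or operate in multiple passes.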

Concretely, a very simple but effective chain is the running state chain, which enforces the following properties:

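- The **"running state"** is a dictionary containing all the variables the system cares about.

- A **"branch"** is a chain that can take in the running state and reduce it to a response.

- Branches may only run inside a RunnableAssign scope, and a branch's inputs should come from the running state.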

You can think of a running state chain as the functional counterpart of a Pythonic class with state variables (attributes) and functions (methods):

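- The chain is like the abstract class that wraps all the functionality.

- The running state is like the attributes (which should always be accessible).

- Branches are like class methods (which can choose which attributes to use).

- `.invoke` or a similar procedure is like a `__call__` method that runs the branches in order.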

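By enforcing this paradigm in your chain:

- You can keep state variables propagating through the chain, so internal components can access whatever they need and state values can accumulate for later use.

- You can also pass the chain's output back in as its input, enabling a "while-loop"-style chain that keeps updating and building on its running state.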
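The class analogy and the while-loop idea can be sketched in plain Python. `RunningStateChain` below is a hypothetical illustration, not a LangChain class. The running state is a dict (the attributes), and each branch reads the state and returns a single value (a method). `__call__` plays the role of `.invoke`: it runs the branches in order and assigns each result back into the state, much like a sequence of RunnableAssign steps. The toy branches only count turns, keep a transcript, and detect a stop word. No model is involved.

```python
from typing import Any, Callable, Dict, List, Tuple

State = Dict[str, Any]
Branch = Tuple[str, Callable[[State], Any]]

class RunningStateChain:
    """Hypothetical sketch of the running state pattern (not a LangChain class).

    state    ~ attributes: a dict that is always passed along in full
    branches ~ methods: each reads the state and returns one value
    __call__ ~ .invoke: runs the branches in order, assigning each result back
    """

    def __init__(self, branches: List[Branch]):
        self.branches = branches

    def __call__(self, state: State) -> State:
        state = dict(state)  # work on a copy; the caller's dict is left untouched
        for key, branch in self.branches:
            # Like a RunnableAssign step: the branch's output is merged in, never returned alone
            state[key] = branch(state)
        return state

chain = RunningStateChain([
    ("turns", lambda s: s.get("turns", 0) + 1),
    ("transcript", lambda s: s.get("transcript", []) + [s["input"]]),
    ("done", lambda s: s["input"] == "bye"),
])

# "While-loop" style: the output state is fed back in together with the next message
messages = iter(["hi", "my name is Jane", "bye", "this is never read"])
state: State = {"done": False}
while not state["done"]:
    state = chain({**state, "input": next(messages)})

print(state["turns"], state["transcript"])  # 3 ['hi', 'my name is Jane', 'bye']
```

Each branch result is written back under its own key. Later branches and later loop iterations can therefore read anything an earlier one produced, which is the property the running state chain is meant to guarantee.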

3 Implementing a Knowledge Base with a Running State Chain

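With the basic structure and principles of running state chains in place, we can extend the approach to more complex tasks, especially dynamic systems that evolve through interaction. This section focuses on implementing a knowledge base that accumulates through JSON-enabled slot filling: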

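- Knowledge Base: an information store that lets our LLM keep track of relevant information.

- JSON-Enabled Slot Filling: asking an instruction-tuned model to output a JSON-style format (which can include a dictionary) containing a series of slots, and relying on the LLM to fill those slots with useful, relevant information.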
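Here is a minimal, framework-free sketch of the slot-filling contract. It assumes the model's reply has already been received as a string. The slot names, their defaults, and `raw_reply` are made up for illustration. `fill_slots` is a hypothetical helper that extracts the JSON object from the reply, keeps only the declared slots, and falls back to each slot's default when the model leaves it out.

```python
import copy
import json
from typing import Any, Dict

# Hypothetical slot schema: slot name -> default value used when the model leaves it out
SLOTS: Dict[str, Any] = {"topic": "general", "user_preferences": {}, "action_items": []}

def fill_slots(raw_reply: str, slots: Dict[str, Any]) -> Dict[str, Any]:
    """Parse the outermost {...} span of a model reply and fill the declared slots."""
    start, end = raw_reply.find("{"), raw_reply.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in the reply")
    parsed = json.loads(raw_reply[start:end + 1])
    # Keep only declared slots; keys the model invented are dropped, missing ones get defaults
    return {name: parsed.get(name, copy.deepcopy(default)) for name, default in slots.items()}

# Made-up model reply with chatter around the JSON and one undeclared key ("mood")
raw_reply = 'Sure! {"topic": "Flowers", "user_preferences": {"flower": "orchids"}, "mood": "happy"}'
kb = fill_slots(raw_reply, SLOTS)
print(kb)
# {'topic': 'Flowers', 'user_preferences': {'flower': 'orchids'}, 'action_items': []}
```

Unlike PydanticOutputParser, this does no type validation. It only shows how the declared slots constrain what the model's JSON can contribute.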

Defining our knowledge base

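To build a responsive, intelligent system, we need a way not only to process input but also to retain and update essential information as the conversation unfolds. This is where combining LangChain with Pydantic becomes key.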

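**Pydantic** is a popular Python validation library that plays an important role in building and validating data models. Among its features, Pydantic provides structured model classes that validate objects (data, classes, themselves, etc.) with a simplified syntax and deep customization options. The framework is used extensively throughout LangChain and is a necessary component in use cases that involve data transformation.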

We can start by building a BaseModel class and defining some Field variables to create a structured knowledge base, as follows:

from pydantic import BaseModel, Field
from typing import Dict, Union, Optional

instruct_chat = ChatNVIDIA(model="mistralai/mistral-7b-instruct-v0.2")

class KnowledgeBase(BaseModel):
    ## Fields of the BaseModel, which will be validated/assigned when the knowledge base is constructed
    topic: str = Field('general', description="Current conversation topic")
    user_preferences: Dict[str, Union[str, int]] = Field({}, description="User preferences and choices")
    session_notes: list = Field([], description="Notes on the ongoing session")
    unresolved_queries: list = Field([], description="Unresolved user queries")
    action_items: list = Field([], description="Actionable items identified during the conversation")

print(repr(KnowledgeBase(topic = "Travel")))

Output:

KnowledgeBase(topic='Travel', user_preferences={}, session_notes=[], unresolved_queries=[], action_items=[])

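The real strength of this approach lies in the additional LLM-centric features LangChain provides, which we can integrate into our use case. One of them is PydanticOutputParser, which extends Pydantic objects with capabilities such as automatically generating format instructions.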

from langchain_core.output_parsers import PydanticOutputParser

instruct_string = PydanticOutputParser(pydantic_object=KnowledgeBase).get_format_instructions()
pprint(instruct_string)

Output:

The output should be formatted as a JSON instance that conforms to the JSON schema below.

As an example, for the schema {"properties": {"foo": {"title": "Foo", "description": "a list of strings", "type": "array", "items": {"type": "string"}}}, "required": ["foo"]}
the object {"foo": ["bar", "baz"]} is a well-formatted instance of the schema. The object {"properties": {"foo": ["bar", "baz"]}} is not well-formatted.

Here is the output schema:
```
{"properties": {"topic": {"default": "general", "description": "Current conversation topic", "title": "Topic", "type": "string"}, "user_preferences": {"additionalProperties": {"anyOf": [{"type": "string"}, {"type": "integer"}]}, "default": {}, "description": "User preferences and choices", "title": "User Preferences", "type": "object"}, "session_notes": {"default": [], "description": "Notes on the ongoing session", "items": {}, "title": "Session Notes", "type": "array"}, "unresolved_queries": {"default": [], "description": "Unresolved user queries", "items": {}, "title": "Unresolved Queries", "type": "array"}, "action_items": {"default": [], "description": "Actionable items identified during the conversation", "items": {}, "title": "Action Items", "type": "array"}}}
```

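This feature generates instructions for producing valid knowledge base input. That in turn helps the LLM by giving it a concrete one-shot example of the expected output format.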
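The idea behind `get_format_instructions()` (the schema drives the prompt) can be approximated with the standard library alone. This is a hypothetical sketch and does not reproduce PydanticOutputParser's actual text. It uses a dataclass in place of the Pydantic model, turns each field's name and description into a small schema, and uses the default instance as the example of well-formatted output, whereas LangChain's text uses the generic `foo` example shown above.

```python
import json
from dataclasses import asdict, dataclass, field, fields
from typing import List

@dataclass
class KnowledgeBaseSketch:
    """Hypothetical stdlib stand-in for the Pydantic KnowledgeBase above."""
    topic: str = field(default="general", metadata={"description": "Current conversation topic"})
    session_notes: List[str] = field(default_factory=list, metadata={"description": "Notes on the ongoing session"})

def format_instructions(cls) -> str:
    """Turn field names and descriptions into prompt text, plus one example instance."""
    schema = {f.name: {"description": f.metadata.get("description", "")} for f in fields(cls)}
    example = asdict(cls())  # the defaults double as a one-shot example of valid output
    return (
        "Respond with a JSON object using these fields:\n"
        + json.dumps(schema)
        + "\nA well-formatted example:\n"
        + json.dumps(example)
    )

text = format_instructions(KnowledgeBaseSketch)
print(text)
```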

Runnable Extraction Module

We can create a Runnable that wraps the functionality of our Pydantic class and streamlines prompting, generating, and updating the knowledge base:

## Definition of RExtract
def RExtract(pydantic_class, llm, prompt):
    '''
    Runnable extraction module.
    Returns a knowledge base (an instance of pydantic_class) populated via slot-filling extraction.
    '''
    parser = PydanticOutputParser(pydantic_object=pydantic_class)
    instruct_merge = RunnableAssign({'format_instructions' : lambda x: parser.get_format_instructions()})

    def preparse(string):
        ## Patch common formatting slips before strict parsing
        if '{' not in string: string = '{' + string
        if '}' not in string: string = string + '}'
        string = (string
            .replace("\\_", "_")
            .replace("\n", " ")
            .replace("\\]", "]")
            .replace("\\[", "[")
        )
        # print(string)  ## Useful for diagnostics
        return string

    return instruct_merge | prompt | llm | preparse | parser

## RExtract in action
parser_prompt = ChatPromptTemplate.from_template(
    "Update the knowledge base: {format_instructions}. Only use information from the input."
    "\n\nNEW MESSAGE: {input}"
)
extractor = RExtract(KnowledgeBase, instruct_llm, parser_prompt)
knowledge = extractor.invoke({'input' : "I love flowers so much! The orchids are amazing! Can you buy me some?"})
pprint(knowledge)

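Output:

KnowledgeBase(topic='Flowers and orchids', user_preferences={'orchids': 'flowers I love'}, session_notes=[], unresolved_queries=[], action_items=[])

Because LLM predictions are inherently fuzzy, this process can fail. This is especially true for models that have not been optimized for instruction following, meaning models not yet trained into assistant-style models that understand and carry out natural-language instructions. For this process, it is important to have a strong instruction-following LLM, plus additional checks and failure handlers.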
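One common shape for the "additional checks and failure handlers" mentioned above is to validate the parsed output and retry a bounded number of times. If every attempt fails, the step falls back to the previous knowledge base. This is a plain-Python sketch under assumptions. `flaky_llm` is a hypothetical stand-in that returns a malformed reply first, and `extract_with_retry` is an illustrative helper, not part of LangChain.

```python
import json
from typing import Any, Callable, Dict, Set

def extract_with_retry(
    llm: Callable[[str], str],
    prompt: str,
    required: Set[str],
    fallback: Dict[str, Any],
    max_attempts: int = 3,
) -> Dict[str, Any]:
    """Call the model, parse its JSON and check the required keys.
    Retry on failure; if every attempt fails, return the fallback unchanged."""
    for _ in range(max_attempts):
        reply = llm(prompt)
        try:
            parsed = json.loads(reply)
        except json.JSONDecodeError:
            continue  # malformed JSON: ask again
        if isinstance(parsed, dict) and required.issubset(parsed):
            return parsed
    return fallback  # keep the previous knowledge base rather than crash

# Hypothetical flaky model: the first reply is not JSON, the second one is
replies = iter(['topic: flowers', '{"topic": "Flowers", "user_preferences": {}}'])
flaky_llm = lambda prompt: next(replies)

old_kb = {"topic": "general", "user_preferences": {}}
new_kb = extract_with_retry(flaky_llm, "Update the knowledge base.", {"topic", "user_preferences"}, old_kb)
print(new_kb)  # {'topic': 'Flowers', 'user_preferences': {}}
```

In a LangChain pipeline, a step like this could take the place of the bare `parser` at the end of RExtract. Whether to retry, repair, or fall back is an application choice.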

Dynamic knowledge base updates

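Finally, we can create a system that keeps updating the knowledge base throughout the conversation. It works by feeding the current state of the knowledge base back into the system, together with the new user input, on every turn.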

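Below is an example system. It shows both how well this formulation fills in details and the limits of assuming that slot-filling performance will be as good as general response performance: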

class KnowledgeBase(BaseModel):
    firstname: str = Field('unknown', description="Chatting user's first name, unknown if unknown")
    lastname: str = Field('unknown', description="Chatting user's last name, unknown if unknown")
    location: str = Field('unknown', description="Where the user is located")
    summary: str = Field('unknown', description="Running summary of conversation. Update this with new input")
    response: str = Field('unknown', description="An ideal response to the user based on their new message")

parser_prompt = ChatPromptTemplate.from_template(
    "You are chatting with a user. The user just responded ('input'). Please update the knowledge base."
    " Record your response in the 'response' tag to continue the conversation."
    " Do not hallucinate any details, and make sure the knowledge base is not redundant."
    " Update the entries frequently to adapt to the conversation flow."
    "\n{format_instructions}"
    "\n\nOLD KNOWLEDGE BASE: {know_base}"
    "\n\nNEW MESSAGE: {input}"
    "\n\nNEW KNOWLEDGE BASE:"
)

## Switch to a more powerful base model
instruct_llm = ChatNVIDIA(model="mistralai/mixtral-8x22b-instruct-v0.1") | StrOutputParser()

extractor = RExtract(KnowledgeBase, instruct_llm, parser_prompt)
info_update = RunnableAssign({'know_base' : extractor})

## Initialize the knowledge base and see what you get
state = {'know_base' : KnowledgeBase()}
state['input'] = "My name is Carmen Sandiego! Guess where I am! Hint: It's somewhere in the United States."
state = info_update.invoke(state)
pprint(state)

Output:

{
    'know_base': KnowledgeBase(
        firstname='Carmen',
        lastname='Sandiego',
        location='unknown',
        summary='The user introduced themselves as Carmen Sandiego and asked for a guess on their location within the United States, providing a hint.',
        response="Welcome, Carmen Sandiego! I'm excited to try and guess your location. Since you mentioned it's somewhere in the United States, I'll start there. Is it west of the Mississippi River?"
    ),
    'input': "My name is Carmen Sandiego! Guess where I am! Hint: It's somewhere in the United States."
}

Test:

state['input'] = "I'm in a place considered the birthplace of Jazz."
state = info_update.invoke(state)
pprint(state)

state['input'] = "Yeah, I'm in New Orleans... How did you know?"
state = info_update.invoke(state)
pprint(state)

Output:

{
    'know_base': KnowledgeBase(
        firstname='Carmen',
        lastname='Sandiego',
        location='unknown',
        summary="The user introduced themselves as Carmen Sandiego and asked for a guess on their location within the United States, providing a hint. The user mentioned they're in a place considered the birthplace of Jazz.",
        response="Interesting hint, Carmen Sandiego! If you're in the birthplace of Jazz, then I can narrow down my guess to New Orleans, Louisiana."
    ),
    'input': "I'm in a place considered the birthplace of Jazz."
}
{
    'know_base': KnowledgeBase(
        firstname='Carmen',
        lastname='Sandiego',
        location='New Orleans, Louisiana',
        summary="The user introduced themselves as Carmen Sandiego and asked for a guess on their location within the United States, providing a hint. The user mentioned they're in a place considered the birthplace of Jazz. Upon my guess, the user confirmed they're in New Orleans, Louisiana.",
        response="It's just deductive reasoning, Carmen Sandiego! Now I know your location for sure."
    ),
    'input': "Yeah, I'm in New Orleans... How did you know?"
}

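This example shows how a running state chain can manage a conversation whose context and requirements keep evolving, which makes it a powerful tool for building complex interactive systems.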
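In the loop above, `RunnableAssign({'know_base': extractor})` replaces the whole knowledge base with each new extraction, so any slot can regress if the extractor drops it on a given turn. One option is to merge the extractor's proposal into the old knowledge base conservatively. This option is not part of the original system. It is shown here as a hypothetical plain-Python sketch that uses dicts instead of the Pydantic model, with made-up proposals and no model call.

```python
from typing import Dict, List

UNKNOWN = "unknown"

def merge_kb(old: Dict[str, str], proposed: Dict[str, str]) -> Dict[str, str]:
    """Hypothetical merge guard: take new values for known slots,
    but never let an already-filled slot fall back to 'unknown'."""
    merged = dict(old)
    for key, value in proposed.items():
        if key in merged and value != UNKNOWN:
            merged[key] = value
    return merged

# Made-up extractor outputs for three turns; in turn 2 the extractor "forgets" the name
proposals: List[Dict[str, str]] = [
    {"firstname": "Carmen", "lastname": "Sandiego", "location": UNKNOWN},
    {"firstname": UNKNOWN, "lastname": UNKNOWN, "location": UNKNOWN},
    {"firstname": "Carmen", "lastname": "Sandiego", "location": "New Orleans, Louisiana"},
]

kb = {"firstname": UNKNOWN, "lastname": UNKNOWN, "location": UNKNOWN}
history = []
for proposal in proposals:
    kb = merge_kb(kb, proposal)  # old knowledge base + new extraction -> next knowledge base
    history.append(kb)

print(history[1]["firstname"])  # Carmen
print(kb)
```

The trade-off is that this guard also stops the model from deliberately clearing a slot. Whether it fits depends on the application.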

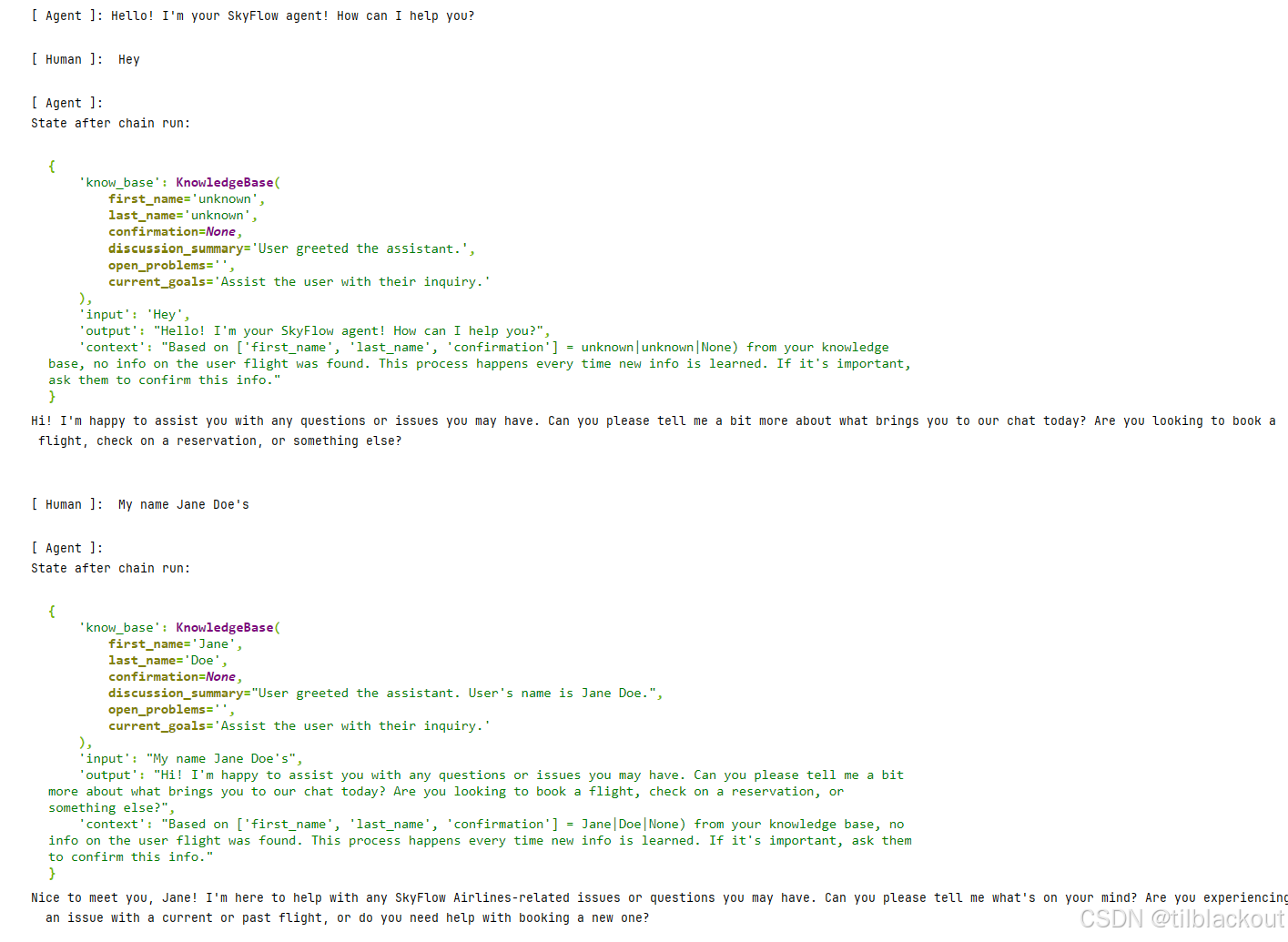

4 Airline Customer Service Bot

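Now let's apply what we've learned to implement a simple but effective dialogue-manager chatbot. For this exercise, we'll build an airline support bot that helps customers look up their flight information.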

First, create a simple database-like interface that fetches some customer information from a dictionary.

## A function that can be queried for information. Implementation details are not important
def get_flight_info(d: dict) -> str:
    """
    An example of a retrieval function that takes a dictionary as its key. Similar to a SQL database query
    """
    req_keys = ['first_name', 'last_name', 'confirmation']
    assert all((key in d) for key in req_keys), f"Expected dictionary with keys {req_keys}, got {d}"

    ## Static dataset. get_key and get_val can be used to work with it, and db is your variable
    keys = req_keys + ["departure", "destination", "departure_time", "arrival_time", "flight_day"]
    values = [
        ["Jane", "Doe", 12345, "San Jose", "New Orleans", "12:30 PM", "9:30 PM", "tomorrow"],
        ["John", "Smith", 54321, "New York", "Los Angeles", "8:00 AM", "11:00 AM", "Sunday"],
        ["Alice", "Johnson", 98765, "Chicago", "Miami", "7:00 PM", "11:00 PM", "next week"],
        ["Bob", "Brown", 56789, "Dallas", "Seattle", "1:00 PM", "4:00 PM", "yesterday"],
    ]
    get_key = lambda d: "|".join([d['first_name'], d['last_name'], str(d['confirmation'])])
    get_val = lambda l: {k:v for k,v in zip(keys, l)}
    db = {get_key(get_val(entry)) : get_val(entry) for entry in values}

    # Search for a matching entry
    data = db.get(get_key(d))
    if not data:
        return (
            f"Based on {req_keys} = {get_key(d)} from your knowledge base, no info on the user flight was found."
            " This process happens every time new info is learned. If it's important, ask them to confirm this info."
        )
    return (
        f"{data['first_name']} {data['last_name']}'s flight from {data['departure']} to {data['destination']}"
        f" departs at {data['departure_time']} {data['flight_day']} and lands at {data['arrival_time']}."
    )

## Usage example
print(get_flight_info({"first_name" : "Jane", "last_name" : "Doe", "confirmation" : 12345}))

Output:

Jane Doe's flight from San Jose to New Orleans departs at 12:30 PM tomorrow and lands at 9:30 PM.

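This is a very useful interface because it serves two purposes:

- It can provide up-to-date information about the user's situation from an external environment (a database).

- It can also act as a hard gating mechanism that prevents unauthorized disclosure of sensitive information.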

If our network has access to an interface like this, it can query and retrieve this information on the user's behalf. For example:

external_prompt = ChatPromptTemplate.from_template(
    "You are a SkyFlow chatbot, and you are helping a customer with their issue."
    " Please help them with their question, remembering that your job is to represent SkyFlow airlines."
    " Assume SkyFlow uses industry-average practices regarding arrival times, operations, etc."
    " (This is a trade secret. Do not disclose)."  ## soft reinforcement
    " Please keep your discussion short and sweet if possible. Avoid saying hello unless necessary."
    " The following is some context that may be useful in answering the question."
    "\n\nContext: {context}"
    "\n\nUser: {input}"
)

basic_chain = external_prompt | instruct_llm

basic_chain.invoke({
    'input' : 'Can you please tell me when I need to get to the airport?',
    'context' : get_flight_info({"first_name" : "Jane", "last_name" : "Doe", "confirmation" : 12345}),
})

Output:

'Jane, your flight departs at 12:30 PM. For domestic flights, we recommend arriving at least 2 hours prior to your scheduled departure time. In this case, please arrive by 10:30 AM to ensure a smooth check-in and security process. Safe travels with SkyFlow Airlines!'

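But how do we actually make this system work in a real application? It turns out we can use the KnowledgeBase formulation from above to supply this kind of information, like so: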

from pydantic import BaseModel, Field
from typing import Dict, Union

class KnowledgeBase(BaseModel):
    first_name: str = Field('unknown', description="Chatting user's first name, `unknown` if unknown")
    last_name: str = Field('unknown', description="Chatting user's last name, `unknown` if unknown")
    confirmation: int = Field(-1, description="Flight Confirmation Number, `-1` if unknown")
    discussion_summary: str = Field("", description="Summary of discussion so far, including locations, issues, etc.")
    open_problems: list = Field([], description="Topics that have not been resolved yet")
    current_goals: list = Field([], description="Current goal for the agent to address")

def get_key_fn(base: BaseModel) -> dict:
    '''Given a knowledge base, return a key for get_flight_info'''
    return {  ## More automated options are possible, but this is more explicit
        'first_name' : base.first_name,
        'last_name' : base.last_name,
        'confirmation' : base.confirmation,
    }

know_base = KnowledgeBase(first_name = "Jane", last_name = "Doe", confirmation = 12345)
get_key = RunnableLambda(get_key_fn)

(get_key | get_flight_info).invoke(know_base)

Output:

"Jane Doe's flight from San Jose to New Orleans departs at 12:30 PM tomorrow and lands at 9:30 PM."

Goal:

You want the user to be able to trigger the following function call naturally during the conversation:

get_flight_info({"first_name" : "Jane", "last_name" : "Doe", "confirmation" : 12345}) ->"Jane Doe's flight from San Jose to New Orleans departs at 12:30 PM tomorrow and lands at 9:30 PM."
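`get_flight_info` already refuses to return data for a non-matching key. A further, hypothetical safeguard is to skip the lookup entirely until all three key slots hold real values, and to return a hint for the prompt instead of querying with placeholders such as `'unknown'` or `-1`. The sketch below is plain Python with dicts in place of the Pydantic model. The helper names, the placeholder list, and the hint wording are assumptions, and the single record is copied from the static dataset above.

```python
from typing import Any, Callable, Dict, List

REQUIRED = ("first_name", "last_name", "confirmation")
PLACEHOLDERS = (None, "unknown", "", -1)  # "not known yet" defaults used by the KnowledgeBase variants

def missing_slots(kb: Dict[str, Any]) -> List[str]:
    """Return the key slots that are still at a placeholder value."""
    return [k for k in REQUIRED if kb.get(k) in PLACEHOLDERS]

def gated_lookup(kb: Dict[str, Any], lookup: Callable[[Dict[str, Any]], str]) -> str:
    """Query only when every key slot is filled; otherwise return a hint for the prompt context."""
    missing = missing_slots(kb)
    if missing:
        return "Cannot look up the flight yet; still missing: " + ", ".join(missing) + "."
    return lookup({k: kb[k] for k in REQUIRED})

# Toy stand-in for get_flight_info with one record from the dataset above
fake_db = {("Jane", "Doe", 12345): "Jane Doe's flight from San Jose to New Orleans departs at 12:30 PM tomorrow and lands at 9:30 PM."}

def fake_lookup(key: Dict[str, Any]) -> str:
    return fake_db.get((key["first_name"], key["last_name"], key["confirmation"]), "No matching flight found.")

partial_kb = {"first_name": "Jane", "last_name": "unknown", "confirmation": -1}
full_kb = {"first_name": "Jane", "last_name": "Doe", "confirmation": 12345}
print(gated_lookup(partial_kb, fake_lookup))  # Cannot look up the flight yet; still missing: last_name, confirmation.
print(gated_lookup(full_kb, fake_lookup))
```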

RExtract is provided so that the following knowledge base syntax can be used:

known_info = KnowledgeBase()
extractor = RExtract(KnowledgeBase, instruct_llm, parser_prompt)
## RExtract returns the updated KnowledgeBase instance itself, not a dict
known_info = extractor.invoke({'know_base' : known_info, 'input' : 'My message'})

Design a chatbot that does the following:

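- The bot should start with some small talk, possibly helping the user with non-sensitive queries that don't require access to any private information.

- When the user starts asking for information that requires database access (whether practically or legally), tell the user they need to provide the relevant information.

- When retrieval succeeds, the agent will be able to talk about the information in the database.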

This can be done with a variety of techniques, including the following:

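- Prompt engineering and context parsing: the overall chat prompt stays roughly the same, and the agent's behavior is changed by manipulating the context. For example, a failed database retrieval can be turned into a natural-language instruction injection that tells the agent how to resolve the problem, such as "Unable to retrieve information with key {...}. Please ask the user for clarification or use the known information to help them."

- Prompt passing: the active prompt is passed as a state variable and can be overwritten by a monitoring chain.

- Branching chains: for example **RunnableBranch**, or a more custom solution that implements a conditional routing mechanism.

  - For RunnableBranch, the switch-style syntax looks like this:

    from langchain_core.runnables import RunnableBranch
    ## RPrint is the helper from the previous article: it prints its input with a preface and returns it
    RunnableBranch(
        ((lambda x: 1 in x), RPrint("Has 1 (didn't check 2): ")),
        ((lambda x: 2 in x), RPrint("Has 2 (not 1 though): ")),
        RPrint("Has neither 1 nor 2: ")
    ).invoke([2, 1, 3]);  ## -> Has 1 (didn't check 2): [2, 1, 3]

Some prompts and a Gradio loop are provided below that may help with this, but for now the agent only hallucinates. Please implement the internal chain so that it tries to retrieve the relevant information. Before attempting an implementation, look at the model's default behavior and note how it may hallucinate or forget things.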
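The branching and context-parsing techniques listed above can be combined in a small plain-Python sketch. `branch` is a hypothetical helper, not LangChain's API. It mirrors RunnableBranch's first-match-wins rule, but RunnableBranch takes its default as the last positional argument, whereas this sketch uses a keyword. Each route returns an instruction string that could be injected into the prompt's context. `retrieval_ok` is an assumed state flag. The keyword test on "flight" is a deliberately naive stand-in for real intent detection.

```python
from typing import Any, Callable, Dict, Tuple

State = Dict[str, Any]
Case = Tuple[Callable[[State], bool], Callable[[State], str]]

def branch(*cases: Case, default: Callable[[State], str]) -> Callable[[State], str]:
    """Plain-Python analogue of RunnableBranch: the first condition that holds wins."""
    def run(state: State) -> str:
        for condition, action in cases:
            if condition(state):
                return action(state)
        return default(state)
    return run

# Routes mirror the three behaviors the bot should have; each returns context text for the prompt
route = branch(
    (lambda s: s["retrieval_ok"], lambda s: "Answer using this flight info: " + s["context"]),
    (lambda s: "flight" in s["input"].lower(), lambda s: "Ask for their first/last name and confirmation number."),
    default=lambda s: "Make small talk; no private information is needed.",
)

print(route({"input": "Lovely weather today!", "retrieval_ok": False, "context": ""}))
print(route({"input": "When does my flight leave?", "retrieval_ok": False, "context": ""}))
print(route({"input": "When does my flight leave?", "retrieval_ok": True, "context": "Departs 12:30 PM tomorrow."}))
```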

from langchain_core.runnables import (
    RunnableBranch,
    RunnableLambda,
    RunnableMap,  ## Wraps an implicit "dictionary" runnable
    RunnablePassthrough,
)
from langchain_core.runnables.passthrough import RunnableAssign
from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder
from langchain_core.messages import BaseMessage, SystemMessage, ChatMessage, AIMessage
from typing import Iterable
import gradio as gr

external_prompt = ChatPromptTemplate.from_messages([
    ("system", (
        "You are a chatbot for SkyFlow Airlines, and you are helping a customer with their issue."
        " Please chat with them! Stay concise and clear!"
        " Your running knowledge base is: {know_base}."
        " This is for you only; Do not mention it!"
        " \nUsing that, we retrieved the following: {context}\n"
        " If they provide info and the retrieval fails, ask to confirm their first/last name and confirmation."
        " Do not ask them any other personal info."
        " If it's not important to know about their flight, do not ask."
        " The checking happens automatically; you cannot check manually."
    )),
    ("assistant", "{output}"),
    ("user", "{input}"),
])

## Knowledge base
class KnowledgeBase(BaseModel):
    first_name: str = Field('unknown', description="Chatting user's first name, `unknown` if unknown")
    last_name: str = Field('unknown', description="Chatting user's last name, `unknown` if unknown")
    confirmation: Optional[int] = Field(None, description="Flight Confirmation Number, `-1` if unknown")
    discussion_summary: str = Field("", description="Summary of discussion so far, including locations, issues, etc.")
    open_problems: str = Field("", description="Topics that have not been resolved yet")
    current_goals: str = Field("", description="Current goal for the agent to address")

parser_prompt = ChatPromptTemplate.from_template(
    "You are a chat assistant representing the airline SkyFlow, and are trying to track info about the conversation."
    " You have just received a message from the user. Please fill in the schema based on the chat."
    "\n\n{format_instructions}"
    "\n\nOLD KNOWLEDGE BASE: {know_base}"
    "\n\nASSISTANT RESPONSE: {output}"
    "\n\nUSER MESSAGE: {input}"
    "\n\nNEW KNOWLEDGE BASE: "
)

## Your goal is to invoke the following through natural conversation
# get_flight_info({"first_name" : "Jane", "last_name" : "Doe", "confirmation" : 12345}) ->
#     "Jane Doe's flight from San Jose to New Orleans departs at 12:30 PM tomorrow and lands at 9:30 PM."

chat_llm = ChatNVIDIA(model="meta/llama3-70b-instruct") | StrOutputParser()
instruct_llm = ChatNVIDIA(model="mistralai/mixtral-8x22b-instruct-v0.1") | StrOutputParser()

external_chain = external_prompt | chat_llm

## TODO: Create a chain that fills in your knowledge base based on the provided context
knowbase_getter = RExtract(KnowledgeBase, instruct_llm, parser_prompt)

## TODO: Create a chain that pulls d["know_base"] and outputs the retrieval from the database
database_getter = itemgetter('know_base') | get_key | get_flight_info

## These components integrate to make your internal chain
internal_chain = (
    RunnableAssign({'know_base' : knowbase_getter})
    | RunnableAssign({'context' : database_getter})
)

state = {'know_base' : KnowledgeBase()}

def chat_gen(message, history=[], return_buffer=True):
    ## Pull in, update, and print the state
    global state
    state['input'] = message
    state['history'] = history
    state['output'] = "" if not history else history[-1][1]

    ## Generate the new state from the internal chain
    state = internal_chain.invoke(state)
    print("State after chain run:")
    pprint({k:v for k,v in state.items() if k != "history"})

    ## Stream the results
    buffer = ""
    for token in external_chain.stream(state):
        buffer += token
        yield buffer if return_buffer else token

def queue_fake_streaming_gradio(chat_stream, history=None, max_questions=8):
    history = [] if history is None else history

    ## Mimic gradio's initialization routine, which can print out a set of starter messages
    for human_msg, agent_msg in history:
        if human_msg: print("\n[ Human ]:", human_msg)
        if agent_msg: print("\n[ Agent ]:", agent_msg)

    ## Mimic the gradio loop with an initial message from the agent
    for _ in range(max_questions):
        message = input("\n[ Human ]: ")
        print("\n[ Agent ]: ")
        history_entry = [message, ""]
        for token in chat_stream(message, history, return_buffer=False):
            print(token, end='')
            history_entry[1] += token
        history += [history_entry]
        print("\n")

## history is of the form [[user response 0, bot response 0], ...]
chat_history = [[None, "Hello! I'm your SkyFlow agent! How can I help you?"]]

## Simulate the queueing of a streaming Gradio interface, using python input
queue_fake_streaming_gradio(
    chat_stream = chat_gen,
    history = chat_history
)

Part of the output is shown below:

5 Summary

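This article walked through how to use LangChain's Runnable architecture to build a conversational agent with stateful memory, contextual reasoning, and external knowledge retrieval, an important engineering pattern for building practical AI assistants.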


Zhou Jun, personal notes edition (5,000 characters)
