1. Downloading a single file

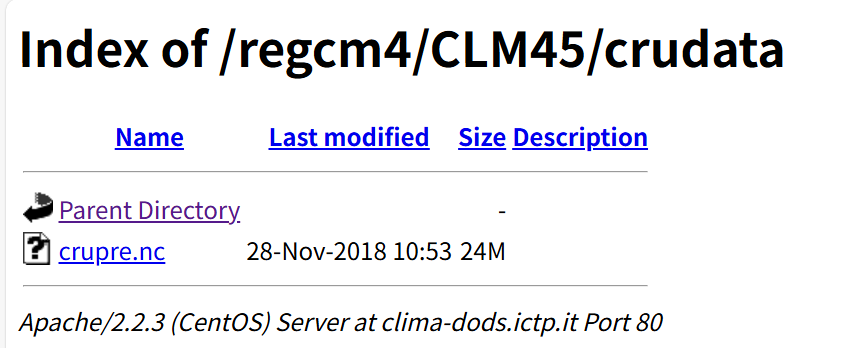

The goal is to download the crupre.nc data file from this site, as follows.

Use the requests library, and fill in the URL of the site, "http://clima-dods.ictp.it/regcm4/CLM45/crudata/", together with the name of the file to download, "crupre.nc".
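The file URL is simply the directory URL followed by the file name. A minimal sketch of that step, using the standard library's urljoin, is shown here; the helper name build_file_url is hypothetical and not part of the original script. It adds the trailing slash when it is missing, because urljoin would otherwise replace the last path segment instead of appending to it.

```python
from urllib.parse import urljoin


def build_file_url(base_url, filename):
    """Join a directory URL and a file name (hypothetical helper)."""
    # Without a trailing slash, urljoin would replace "crudata" with the
    # file name rather than append to it, so add the slash first.
    if not base_url.endswith("/"):
        base_url += "/"
    return urljoin(base_url, filename)


base_url = "http://clima-dods.ictp.it/regcm4/CLM45/crudata/"
print(build_file_url(base_url, "crupre.nc"))
```

Plain string concatenation gives the same result as long as the base URL ends with a slash.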

    import os

    import requests


    def download_file(url, local_filename):
        # Create the target directory if it does not exist yet
        os.makedirs(os.path.dirname(local_filename) or ".", exist_ok=True)
        # Stream the response so large files are not held in memory
        with requests.get(url, stream=True) as r:
            r.raise_for_status()
            with open(local_filename, 'wb') as f:
                for chunk in r.iter_content(chunk_size=8192):
                    f.write(chunk)
        return local_filename


    base_url = "http://clima-dods.ictp.it/regcm4/CLM45/crudata/"
    filename = "crupre.nc"
    file_url = base_url + filename
    local_path = f"./downloads/{filename}"

    try:
        print(f"Downloading {filename}...")
        download_file(file_url, local_path)
        print(f"Download complete! File saved to: {local_path}")
    except Exception as e:
        print(f"Download failed: {e}")

2. Downloading multiple files

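Typing each file name by hand is inconvenient when many files need to be downloaded at once. For example, to download all the .nc files listed on a single web page, the following script can be used: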

    import os

    import requests
    from bs4 import BeautifulSoup


    def get_file_links(url):
        """Return the links to all NC files found on the page."""
        try:
            response = requests.get(url)
            response.raise_for_status()
            soup = BeautifulSoup(response.text, 'html.parser')
            # Collect every link that ends in .nc
            links = []
            for link in soup.find_all('a'):
                href = link.get('href')
                if href and href.endswith('.nc'):
                    links.append(href)
            return links
        except Exception as e:
            print(f"Failed to get the file list: {e}")
            return []


    def download_all_nc_files(base_url, download_dir="./downloads"):
        """Download all NC files listed at base_url."""
        os.makedirs(download_dir, exist_ok=True)
        # Get the file list
        files = get_file_links(base_url)
        if not files:
            print("No NC files found")
            return
        print(f"Found {len(files)} NC files")
        for file in files:
            file_url = base_url + file
            local_path = os.path.join(download_dir, file)
            print(f"Downloading {file}...")
            try:
                with requests.get(file_url, stream=True) as r:
                    r.raise_for_status()
                    with open(local_path, 'wb') as f:
                        for chunk in r.iter_content(chunk_size=8192):
                            f.write(chunk)
                print(f"✓ {file} downloaded")
            except Exception as e:
                print(f"✗ {file} failed: {e}")


    base_url = "http://clima-dods.ictp.it/regcm4/CLM45/surface/"
    download_all_nc_files(base_url)

To skip certain files and download the rest:

    import os

    import requests
    from bs4 import BeautifulSoup


    def get_file_links(url):
        """Return the links to all NC files found on the page."""
        try:
            response = requests.get(url)
            response.raise_for_status()
            soup = BeautifulSoup(response.text, 'html.parser')
            # Collect every link that ends in .nc
            links = []
            for link in soup.find_all('a'):
                href = link.get('href')
                if href and href.endswith('.nc'):
                    links.append(href)
            return links
        except Exception as e:
            print(f"Failed to get the file list: {e}")
            return []


    def download_all_nc_files(base_url, download_dir="./downloads"):
        """Download all NC files listed at base_url, except the skipped ones."""
        os.makedirs(download_dir, exist_ok=True)
        # Get the file list
        files = get_file_links(base_url)
        if not files:
            print("No NC files found")
            return
        print(f"Found {len(files)} NC files")
        print('File names:')
        print(files)
        # Files that should not be downloaded
        skip_files = ['GlobalLandCover.nc', 'mksrf_urban.nc']
        for file in files:
            if file in skip_files:
                print(f"Skipping file: {file}")
                continue
            file_url = base_url + file
            local_path = os.path.join(download_dir, file)
            print(f"Downloading {file}...")
            try:
                with requests.get(file_url, stream=True) as r:
                    r.raise_for_status()
                    with open(local_path, 'wb') as f:
                        for chunk in r.iter_content(chunk_size=8192):
                            f.write(chunk)
                print(f"✓ {file} downloaded")
            except Exception as e:
                print(f"✗ {file} failed: {e}")


    # Usage example
    base_url = "http://clima-dods.ictp.it/regcm4/CLM45/surface/"
    download_all_nc_files(base_url)
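The skip list can be taken one step further so that a rerun does not fetch files that are already on disk. The sketch below is one possible way to do this and is not part of the original script: the helper name plan_downloads and the "non-empty file means finished" rule are both assumptions. It only decides which names are still pending, so it runs without any network access.

```python
import os


def plan_downloads(files, skip_files, download_dir):
    """Return the file names that still need downloading (hypothetical helper)."""
    pending = []
    for name in files:
        if name in skip_files:
            continue  # explicitly excluded by the skip list
        local_path = os.path.join(download_dir, name)
        # Assumption: a non-empty local file was fully downloaded earlier.
        # A file cut off mid-transfer would also pass this check.
        if os.path.isfile(local_path) and os.path.getsize(local_path) > 0:
            continue
        pending.append(name)
    return pending


files = ["a.nc", "GlobalLandCover.nc", "b.nc"]
print(plan_downloads(files, ["GlobalLandCover.nc"], "./downloads"))
```

The returned list could then replace files in the download loop. Because an interrupted download leaves a partial, non-empty file behind, a stricter check (for example, comparing the local size with the Content-Length header) may be preferable for large datasets.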
