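Below is a collection of image similarity algorithms; pick the one that fits your requirements. Each algorithm is briefly described and accompanied by a Python implementation: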

1. Pixel-based algorithms:

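(1). MSE (Mean Squared Error): measures the difference between two images as the mean of the squared differences between corresponding pixel values. A smaller value means the two images are more similar; the value grows with the difference and has no upper bound. It is very sensitive to noise, illumination changes, and geometric transformations, and it ignores structural information, so it does not match human visual perception.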
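Written as a formula, the definition above looks as follows. The symbols are notation introduced here for illustration: $I_1$ and $I_2$ are two grayscale images of the same size, $M \times N$ pixels.

$$
\mathrm{MSE}(I_1, I_2) = \frac{1}{MN} \sum_{i=1}^{M} \sum_{j=1}^{N} \bigl( I_1(i,j) - I_2(i,j) \bigr)^2
$$

Identical images give $\mathrm{MSE} = 0$; for 8-bit images the largest possible value is $255^2 = 65025$.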

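```python
def mse(images1, images2):
    results = []
    for name1 in images1:
        img1 = cv2.imread(str(name1), cv2.IMREAD_GRAYSCALE)
        for name2 in images2:
            img2 = cv2.imread(str(name2), cv2.IMREAD_GRAYSCALE)
            # convert to float first so the uint8 subtraction cannot wrap around
            value = np.mean((img1.astype(np.float32) - img2.astype(np.float32)) ** 2)
            results.append((name1.name, name2.name, f"{value:.2f}"))
    return results
```

(2). PSNR (Peak Signal-to-Noise Ratio): an image quality metric derived from MSE that measures the degree of distortion. A larger value means the images are closer (smaller difference). It is commonly used to evaluate image compression quality; it does not match human visual perception and is sensitive to geometric transformations.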
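As a formula, with $\mathrm{MAX}$ denoting the largest possible pixel value (255 for the 8-bit grayscale images used in this article), PSNR is usually written as:

$$
\mathrm{PSNR} = 10 \log_{10} \frac{\mathrm{MAX}^2}{\mathrm{MSE}}
$$

A worked example under that assumption: if $\mathrm{MSE} = 100$, then $\mathrm{PSNR} = 10 \log_{10}(65025 / 100) \approx 28.13$ dB. When $\mathrm{MSE} = 0$ the ratio is undefined, which is why identical images are reported as infinity.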

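```python
def psnr(images1, images2):
    results = []
    max_pixel = 255.0  # peak value of an 8-bit image
    for name1 in images1:
        img1 = cv2.imread(str(name1), cv2.IMREAD_GRAYSCALE)
        for name2 in images2:
            img2 = cv2.imread(str(name2), cv2.IMREAD_GRAYSCALE)
            mse = np.mean((img1.astype(np.float32) - img2.astype(np.float32)) ** 2)
            if mse == 0:
                # identical images: PSNR is infinite
                results.append((name1.name, name2.name, float("inf")))
            else:
                value = 10 * np.log10((max_pixel ** 2) / mse)
                results.append((name1.name, name2.name, f"{value:.2f}"))
    return results
```

(3). SSIM (Structural Similarity Index Measure): a perceptual image quality metric. Instead of comparing pixel by pixel, it models human perception and evaluates the similarity of two images in terms of luminance, contrast, and structure. The value lies in [-1, 1]; the closer to 1, the more similar the two images. It matches human perception and is relatively insensitive to brightness and contrast changes, but it is not robust to geometric transformations and its parameters need tuning.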
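The local SSIM value that the implementation in this article evaluates at every pixel can be written as follows. Here $\mu_1, \mu_2$ are Gaussian-weighted local means, $\sigma_1^2, \sigma_2^2$ the local variances, and $\sigma_{12}$ the local covariance of the two images; the symbol names are chosen here to mirror the variables in the code.

$$
\mathrm{SSIM} = \frac{(2\mu_1\mu_2 + C_1)(2\sigma_{12} + C_2)}{(\mu_1^2 + \mu_2^2 + C_1)(\sigma_1^2 + \sigma_2^2 + C_2)},
\qquad C_1 = (K_1 L)^2,\; C_2 = (K_2 L)^2
$$

With $K_1 = 0.01$, $K_2 = 0.03$, and $L = 255$ as in the code, $C_1 = 6.5025$ and $C_2 = 58.5225$. The final score is the mean of this map over all pixels.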

```python
def ssim(images1, images2):
    results = []
    L = 255  # dynamic range of 8-bit pixel values
    K1, K2 = 0.01, 0.03
    C1, C2 = (K1 * L) ** 2, (K2 * L) ** 2  # constants that stabilize the division
    kernel = (11, 11)
    sigma = 1.5

    for name1 in images1:
        img1 = cv2.imread(str(name1), cv2.IMREAD_GRAYSCALE).astype(np.float32)
        mu1 = cv2.GaussianBlur(img1, kernel, sigma)  # local mean
        mu1_sq = mu1 ** 2
        sigma1_sq = cv2.GaussianBlur(img1 ** 2, kernel, sigma) - mu1_sq  # local variance
        for name2 in images2:
            img2 = cv2.imread(str(name2), cv2.IMREAD_GRAYSCALE).astype(np.float32)
            mu2 = cv2.GaussianBlur(img2, kernel, sigma)
            mu2_sq = mu2 ** 2
            sigma2_sq = cv2.GaussianBlur(img2 ** 2, kernel, sigma) - mu2_sq
            mu1_mu2 = mu1 * mu2
            sigma12 = cv2.GaussianBlur(img1 * img2, kernel, sigma) - mu1_mu2  # local covariance
            ssim_map = ((2 * mu1_mu2 + C1) * (2 * sigma12 + C2)) / \
                       ((mu1_sq + mu2_sq + C1) * (sigma1_sq + sigma2_sq + C2))
            results.append((name1.name, name2.name, f"{np.mean(ssim_map):.4f}"))
    return results
```

2. Histogram-based algorithms:

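(1). Color histogram: counts how the color values in an image are distributed. It is robust to translation and rotation (only the color distribution matters), sensitive to illumination changes, and weak at telling apart images with similar color distributions but different structure. It can be used for image retrieval based on color distribution and for image deduplication.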
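The properties above can be illustrated with a small sketch. It is not the HSV histogram from OpenCV used in this article: it assumes a plain NumPy grayscale histogram, and the helper names `gray_histogram` and `histogram_correlation` are hypothetical. The score is a Pearson-style correlation over the bins, the same kind of measure as `cv2.HISTCMP_CORREL`.

```python
import numpy as np


def gray_histogram(img, bins=16):
    """Normalized intensity histogram of an 8-bit grayscale image."""
    hist, _ = np.histogram(img, bins=bins, range=(0, 256))
    return hist / hist.sum()


def histogram_correlation(h1, h2):
    """Pearson-style correlation between two histograms (1.0 = same shape)."""
    a = h1 - h1.mean()
    b = h2 - h2.mean()
    denom = np.sqrt((a ** 2).sum() * (b ** 2).sum())
    return 0.0 if denom == 0 else float((a * b).sum() / denom)


# A tiny test image: bright top half, dark bottom half, one odd pixel.
img = np.zeros((4, 4), dtype=np.uint8)
img[:2] = 200
img[0, 0] = 50

rotated = np.rot90(img)  # same pixels, different layout

h_img = gray_histogram(img)
h_rot = gray_histogram(rotated)
print(np.array_equal(img, rotated))          # the two images differ pixel-wise
print(histogram_correlation(h_img, h_rot))   # yet their histograms coincide
```

Because rotation only moves pixels around, the two histograms are identical. The same effect explains the weakness: any rearrangement of the pixels, however different it looks, gets the same score.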

```python
def color_histogram(images1, images2):
    results = []
    bins = [50, 60]  # number of bins for hue and saturation
    for name1 in images1:
        img1 = cv2.imread(str(name1))
        hsv1 = cv2.cvtColor(img1, cv2.COLOR_BGR2HSV)
        hist1 = cv2.calcHist([hsv1], [0, 1], None, bins, [0, 180, 0, 256])
        cv2.normalize(hist1, hist1, alpha=0, beta=1, norm_type=cv2.NORM_MINMAX)
        for name2 in images2:
            img2 = cv2.imread(str(name2))
            hsv2 = cv2.cvtColor(img2, cv2.COLOR_BGR2HSV)
            hist2 = cv2.calcHist([hsv2], [0, 1], None, bins, [0, 180, 0, 256])
            cv2.normalize(hist2, hist2, alpha=0, beta=1, norm_type=cv2.NORM_MINMAX)
            score = cv2.compareHist(hist1, hist2, cv2.HISTCMP_CORREL)
            results.append((name1.name, name2.name, f"{score:.4f}"))
    return results
```

(2). HOG (Histogram of Oriented Gradients): describes the structural features of an image effectively. It is sensitive to large rotations, edges, and texture; robust to illumination changes; its parameters need tuning. It can be used for shape-based image retrieval.

```python
def _resize_padding(img, size, color=(0, 0, 0)):
    # resize while keeping the aspect ratio, then pad to the target size
    h, w = img.shape[:2]
    ratio = min(size[0] / w, size[1] / h)
    neww, newh = int(w * ratio), int(h * ratio)
    resized = cv2.resize(img, (neww, newh))
    padw = size[0] - neww
    padh = size[1] - newh
    top, bottom = padh // 2, padh - padh // 2
    left, right = padw // 2, padw - padw // 2
    return cv2.copyMakeBorder(resized, top, bottom, left, right,
                              borderType=cv2.BORDER_CONSTANT, value=color)


def _cosine_similarity(feature1, feature2):
    dot = np.dot(feature1, feature2)
    norm1 = np.linalg.norm(feature1)
    norm2 = np.linalg.norm(feature2)
    if norm1 == 0 or norm2 == 0:
        return 0.0
    return dot / (norm1 * norm2)


def hog(images1, images2):
    results = []
    win_size = (488, 488)
    cell_size = (8, 8)
    block_size = (16, 16)
    block_stride = (8, 8)
    nbins = 9
    hog = cv2.HOGDescriptor(_winSize=win_size, _blockSize=block_size,
                            _blockStride=block_stride, _cellSize=cell_size, _nbins=nbins)

    for name1 in images1:
        img1 = cv2.imread(str(name1), cv2.IMREAD_GRAYSCALE)
        img1 = _resize_padding(img1, win_size)
        feature1 = hog.compute(img1).flatten()
        for name2 in images2:
            img2 = cv2.imread(str(name2), cv2.IMREAD_GRAYSCALE)
            img2 = _resize_padding(img2, win_size)
            feature2 = hog.compute(img2).flatten()
            results.append((name1.name, name2.name,
                            f"{_cosine_similarity(feature1, feature2):.4f}"))
    return results
```

3. Feature-point-based algorithms:

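(1). SIFT (Scale-Invariant Feature Transform): a local feature descriptor algorithm. It is robust to scale, rotation, and illumination changes, and can be used for content-based image retrieval.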

```python
def sift(images1, images2):
    results = []
    ratio_threshold = 0.75  # ratio-test threshold
    sift = cv2.SIFT_create()
    bf = cv2.BFMatcher(cv2.NORM_L2, crossCheck=False)

    for name1 in images1:
        img1 = cv2.imread(str(name1), cv2.IMREAD_GRAYSCALE)
        kp1, des1 = sift.detectAndCompute(img1, None)
        for name2 in images2:
            img2 = cv2.imread(str(name2), cv2.IMREAD_GRAYSCALE)
            kp2, des2 = sift.detectAndCompute(img2, None)
            if des1 is None or des2 is None:
                results.append((name1.name, name2.name, 0.0))
                continue

            total_features = min(len(kp1), len(kp2))
            if total_features == 0:
                results.append((name1.name, name2.name, 0.0))
                continue

            matches = bf.knnMatch(des1, des2, k=2)
            good_matches = []
            for match_pair in matches:
                if len(match_pair) == 2:
                    m, n = match_pair
                    # keep a match only if it is clearly better than the runner-up
                    if m.distance < ratio_threshold * n.distance:
                        good_matches.append(m)
            results.append((name1.name, name2.name,
                            f"{len(good_matches) / total_features:.4f}"))
    return results
```

(2). SURF (Speeded-Up Robust Features): a fast local feature descriptor algorithm, an accelerated variant of SIFT. The default opencv-contrib-python package does not enable the nonfree module; to use SURF, build OpenCV from source with the OPENCV_ENABLE_NONFREE option turned on. It is robust to scale, rotation, and illumination changes, and can be used for content-based image retrieval and image deduplication.

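(3). ORB (Oriented FAST and Rotated BRIEF): a fast local feature descriptor algorithm. It is robust to rotation, sensitive to scale and illumination changes, and faster to compute than SIFT/SURF.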

```python
def orb(images1, images2):
    results = []
    nfeatures = 1000
    orb = cv2.ORB_create(nfeatures=nfeatures)
    # ORB descriptors are binary, so they are compared with the Hamming distance
    bf = cv2.BFMatcher(cv2.NORM_HAMMING, crossCheck=True)

    for name1 in images1:
        img1 = cv2.imread(str(name1), cv2.IMREAD_GRAYSCALE)
        kp1, des1 = orb.detectAndCompute(img1, None)
        for name2 in images2:
            img2 = cv2.imread(str(name2), cv2.IMREAD_GRAYSCALE)
            kp2, des2 = orb.detectAndCompute(img2, None)
            if des1 is None or des2 is None:
                results.append((name1.name, name2.name, 0.0))
                continue

            matches = bf.match(des1, des2)
            if len(matches) == 0:
                results.append((name1.name, name2.name, 0.0))
                continue

            good_matches = sorted(matches, key=lambda x: x.distance)
            results.append((name1.name, name2.name,
                            f"{len(good_matches) / min(len(kp1), len(kp2)):.4f}"))
    return results
```

(4). LBP (Local Binary Pattern): a texture feature descriptor. Install scikit-image and call its interface directly. It is robust to illumination changes and sensitive to scale and noise.
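To make the idea concrete, here is a minimal sketch of the basic 8-neighbour LBP code in plain NumPy. It is an illustration only: the function name `basic_lbp` and the bit ordering (clockwise from the top-left neighbour) are assumptions made here, and the result is not the same as the "uniform" variant of scikit-image's `local_binary_pattern` used in this article.

```python
import numpy as np


def basic_lbp(img):
    """Basic 3x3 LBP code for every interior pixel of a grayscale image."""
    img = np.asarray(img, dtype=np.int32)
    h, w = img.shape
    center = img[1:-1, 1:-1]
    # neighbour offsets (dy, dx), clockwise starting at the top-left pixel
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros_like(center)
    for bit, (dy, dx) in enumerate(offsets):
        neighbour = img[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        # a neighbour at least as bright as the center sets its bit
        codes |= (neighbour >= center).astype(np.int32) << bit
    return codes


patch = np.array([[5, 9, 1],
                  [4, 6, 7],
                  [2, 3, 8]])
print(basic_lbp(patch))        # [[26]]: bits 1, 3 and 4 are set (9, 7, 8 >= 6)
print(basic_lbp(patch + 50))   # unchanged by a uniform brightness offset
```

Only the ordering between each neighbour and the center pixel matters, which is why adding a constant brightness offset leaves the codes unchanged.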

```python
def _lbp_histogram(img, P=8, R=1):
    lbp = local_binary_pattern(img, P, R, method="uniform")
    # the "uniform" method yields P + 2 distinct pattern labels
    hist = cv2.calcHist([lbp.astype(np.float32)], [0], None, [P + 2], [0, P + 2])
    hist = cv2.normalize(hist, hist).flatten()
    return hist


def lbp(images1, images2):
    results = []
    for name1 in images1:
        img1 = cv2.imread(str(name1), cv2.IMREAD_GRAYSCALE)
        hist1 = _lbp_histogram(img1)
        for name2 in images2:
            img2 = cv2.imread(str(name2), cv2.IMREAD_GRAYSCALE)
            hist2 = _lbp_histogram(img2)
            results.append((name1.name, name2.name,
                            f"{_cosine_similarity(hist1, hist2):.4f}"))
    return results
```

(5). Template Matching: a sliding-window matching method. It is sensitive to scale and illumination changes.

```python
def template_matching(images1, images2):
    results = []
    method = cv2.TM_CCOEFF_NORMED
    for name1 in images1:
        img1 = cv2.imread(str(name1), cv2.IMREAD_GRAYSCALE)
        for name2 in images2:
            img2 = cv2.imread(str(name2), cv2.IMREAD_GRAYSCALE)
            res = cv2.matchTemplate(img2, img1, method)
            min_val, max_val, _, _ = cv2.minMaxLoc(res)
            if method in [cv2.TM_SQDIFF, cv2.TM_SQDIFF_NORMED]:
                # for squared-difference methods the best match is the minimum
                results.append((name1.name, name2.name, f"{1 - min_val:.4f}"))
            else:
                results.append((name1.name, name2.name, f"{max_val:.4f}"))
    return results
```

(6). GLCM (Gray-Level Co-occurrence Matrix): extracts texture features. Its parameters need tuning; it is sensitive to noise and scale and relatively robust to illumination changes.

```python
def _get_glcm_features(img):
    distances = [1]
    angles = [0, np.pi / 4, np.pi / 2, 3 * np.pi / 4]
    features = ["contrast", "correlation", "energy", "homogeneity"]

    img = cv2.equalizeHist(img)
    glcm = graycomatrix(img, distances=distances, angles=angles, symmetric=True, normed=True)
    # concatenate every texture property over all distance/angle pairs
    feat = np.hstack([graycoprops(glcm, f).flatten() for f in features])
    return feat


def glcm(images1, images2):
    results = []
    for name1 in images1:
        img1 = cv2.imread(str(name1), cv2.IMREAD_GRAYSCALE)
        feat1 = _get_glcm_features(img1)
        for name2 in images2:
            img2 = cv2.imread(str(name2), cv2.IMREAD_GRAYSCALE)
            feat2 = _get_glcm_features(img2)
            results.append((name1.name, name2.name,
                            f"{_cosine_similarity(feat1, feat2):.4f}"))
    return results
```

(7). Hausdorff distance: measures how far apart two point sets are, namely the largest distance from a point in one set to its nearest point in the other set. It is sensitive to noise.
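The definition can be sketched directly in NumPy for small point sets. This is a brute-force illustration and not the OpenCV extractor used in this article; the function names `directed_hausdorff` and `hausdorff_distance` are hypothetical and the Euclidean distance is assumed.

```python
import numpy as np


def directed_hausdorff(a, b):
    """Largest distance from a point of `a` to its nearest point in `b`."""
    a = np.asarray(a, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)
    # pairwise Euclidean distances, shape (len(a), len(b))
    d = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=2)
    return float(d.min(axis=1).max())


def hausdorff_distance(a, b):
    """Symmetric Hausdorff distance: the larger of the two directed distances."""
    return max(directed_hausdorff(a, b), directed_hausdorff(b, a))


set_a = [(0, 0), (1, 0)]
set_b = [(0, 0), (4, 0)]
print(directed_hausdorff(set_a, set_b))  # 1.0: (1, 0) is 1 away from (0, 0)
print(directed_hausdorff(set_b, set_a))  # 3.0: (4, 0) is 3 away from (1, 0)
print(hausdorff_distance(set_a, set_b))  # 3.0
```

A single outlying point, such as (4, 0) here, determines the whole result, which illustrates the sensitivity to noise mentioned above.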

```python
def _get_hausdorff_contours(img):
    # binarize with Otsu's threshold, then keep the largest external contour
    _, binary = cv2.threshold(img, 0, 255, cv2.THRESH_BINARY + cv2.THRESH_OTSU)
    contours, _ = cv2.findContours(binary, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_NONE)
    contour = max(contours, key=cv2.contourArea)
    return contour


def hausdorff(images1, images2):
    results = []
    extractor = cv2.createHausdorffDistanceExtractor()
    for name1 in images1:
        img1 = cv2.imread(str(name1), cv2.IMREAD_GRAYSCALE)
        contour1 = _get_hausdorff_contours(img1)
        for name2 in images2:
            img2 = cv2.imread(str(name2), cv2.IMREAD_GRAYSCALE)
            contour2 = _get_hausdorff_contours(img2)
            results.append((name1.name, name2.name,
                            f"{extractor.computeDistance(contour1, contour2):.4f}"))
    return results
```

4. Frequency-domain algorithms:

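(1). FFT (Fast Fourier Transform): computes similarity by analyzing the characteristics of the image in the frequency domain. It is sensitive to scale and robust to illumination changes.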

```python
def _get_fft_features(img):
    f = cv2.dft(np.float32(img), flags=cv2.DFT_COMPLEX_OUTPUT)
    mag = cv2.magnitude(f[:, :, 0], f[:, :, 1])
    mag = np.log1p(mag)  # compress the dynamic range of the spectrum
    mag = cv2.normalize(mag, None, 0, 1, cv2.NORM_MINMAX)
    return mag.flatten()


def fft(images1, images2):
    results = []
    for name1 in images1:
        img1 = cv2.imread(str(name1), cv2.IMREAD_GRAYSCALE)
        v1 = _get_fft_features(img1)
        for name2 in images2:
            img2 = cv2.imread(str(name2), cv2.IMREAD_GRAYSCALE)
            v2 = _get_fft_features(img2)
            results.append((name1.name, name2.name, f"{_cosine_similarity(v1, v2):.4f}"))
    return results
```

(2). Wavelet Transform: analyzes image features by decomposing the image into wavelet coefficients at different frequencies and spatial positions. It is robust to noise. Requires installing pywavelets.
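The article gives no code for this method. As a rough sketch of the idea, the following uses a hand-written single-level Haar decomposition in NumPy instead of PyWavelets, and compares the low-frequency (approximation) bands with cosine similarity. The function names `haar_dwt2` and `wavelet_similarity`, the choice of the Haar wavelet, and the choice of comparing only the approximation band are all assumptions made for illustration; both images are assumed to have the same size.

```python
import numpy as np


def haar_dwt2(img):
    """Single-level 2D Haar transform: one approximation and three detail bands."""
    x = np.asarray(img, dtype=np.float64)
    h, w = x.shape
    x = x[:h - h % 2, :w - w % 2]  # crop to even dimensions
    s = np.sqrt(2.0)
    # pairwise sums/differences along the columns, then along the rows
    lo = (x[:, 0::2] + x[:, 1::2]) / s
    hi = (x[:, 0::2] - x[:, 1::2]) / s
    approx = (lo[0::2, :] + lo[1::2, :]) / s
    detail_a = (lo[0::2, :] - lo[1::2, :]) / s
    detail_b = (hi[0::2, :] + hi[1::2, :]) / s
    detail_c = (hi[0::2, :] - hi[1::2, :]) / s
    return approx, detail_a, detail_b, detail_c


def wavelet_similarity(img1, img2):
    """Cosine similarity of the Haar approximation bands of two same-size images."""
    v1 = haar_dwt2(img1)[0].ravel()
    v2 = haar_dwt2(img2)[0].ravel()
    denom = np.linalg.norm(v1) * np.linalg.norm(v2)
    return 0.0 if denom == 0 else float(np.dot(v1, v2) / denom)


flat = np.ones((4, 4))
approx, *details = haar_dwt2(flat)
print(approx)                          # 2x2 band, every entry equals 2.0
print([float(np.abs(d).max()) for d in details])  # detail bands are all zero
print(wavelet_similarity(flat, flat))
```

Pixel-level noise mostly ends up in the detail bands, so comparing only the approximation band is one way to obtain the noise robustness described above.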

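(3). DCT (Discrete Cosine Transform): converts the image from the spatial domain to the frequency domain. It is sensitive to geometric transformations and noise and robust to illumination changes.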

```python
def _get_dct_features(img, low_freq_size):
    dct = cv2.dct(np.float32(img))
    dct = dct[:low_freq_size, :low_freq_size]  # keep only the low-frequency corner
    v = dct.flatten()
    v = (v - np.min(v)) / (np.max(v) - np.min(v) + 1e-8)  # min-max normalization
    return v


def dct(images1, images2):
    results = []
    low_freq_size = 8
    for name1 in images1:
        img1 = cv2.imread(str(name1), cv2.IMREAD_GRAYSCALE)
        v1 = _get_dct_features(img1, low_freq_size)
        for name2 in images2:
            img2 = cv2.imread(str(name2), cv2.IMREAD_GRAYSCALE)
            v2 = _get_dct_features(img2, low_freq_size)
            results.append((name1.name, name2.name, f"{_cosine_similarity(v1, v2):.4f}"))
    return results
```

5. Hash-based algorithms:

(1). pHash (Perceptual Hash): converts an image into a hash value and computes similarity by comparing the Hamming distance between hash values. It can be used for image deduplication.

```python
def _get_hash_value(img, low_freq_size):
    dct = cv2.dct(np.float32(img))
    dct = dct[:low_freq_size, :low_freq_size]
    mean = np.mean(dct)
    # one bit per low-frequency coefficient: 1 if above the mean, else 0
    value = (dct > mean).astype(np.uint8).flatten()
    return value


def _hamming_distance(hash1, hash2):
    return np.count_nonzero(hash1 != hash2)


def phash(images1, images2):
    results = []
    hash_size = 32
    low_freq_size = 8
    for name1 in images1:
        img1 = cv2.imread(str(name1), cv2.IMREAD_GRAYSCALE)
        img1 = cv2.resize(img1, (hash_size, hash_size))
        hash_value1 = _get_hash_value(img1, low_freq_size)
        for name2 in images2:
            img2 = cv2.imread(str(name2), cv2.IMREAD_GRAYSCALE)
            img2 = cv2.resize(img2, (hash_size, hash_size))
            hash_value2 = _get_hash_value(img2, low_freq_size)
            dist = _hamming_distance(hash_value1, hash_value2)
            results.append((name1.name, name2.name,
                            f"{1 - dist / hash_value1.size:.4f}"))
    return results
```

Besides pHash, there are also aHash (Average Hash), dHash (Difference Hash), and others.
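For comparison, a minimal sketch of aHash and dHash in NumPy follows. It assumes the image has already been converted to grayscale and resized (8x8 for aHash, 8 rows by 9 columns for dHash); the function names and these sizes are illustrative choices, not taken from the article. The similarity is derived from the Hamming distance in the same way as in the pHash code.

```python
import numpy as np


def ahash_bits(gray_8x8):
    """Average hash: one bit per pixel, set where the pixel exceeds the mean."""
    g = np.asarray(gray_8x8, dtype=np.float64)
    return (g > g.mean()).astype(np.uint8).ravel()


def dhash_bits(gray_8x9):
    """Difference hash: one bit per horizontal neighbour pair, set where the right pixel is brighter."""
    g = np.asarray(gray_8x9, dtype=np.float64)
    return (g[:, 1:] > g[:, :-1]).astype(np.uint8).ravel()


def hash_similarity(bits1, bits2):
    """1 minus the normalized Hamming distance between two bit arrays."""
    return 1.0 - np.count_nonzero(bits1 != bits2) / bits1.size


small = np.arange(64).reshape(8, 8)            # stand-in for a resized image
ramp = np.tile(np.arange(9), (8, 1))           # brightness rising left to right

print(int(ahash_bits(small).sum()))            # 32: the upper half of the values
print(int(dhash_bits(ramp).sum()))             # 64: every right neighbour is brighter
print(hash_similarity(ahash_bits(small), ahash_bits(small + 10)))  # 1.0
```

Both hashes depend only on relative brightness (against the mean, or against the neighbouring pixel), so a uniform brightness offset does not change them.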

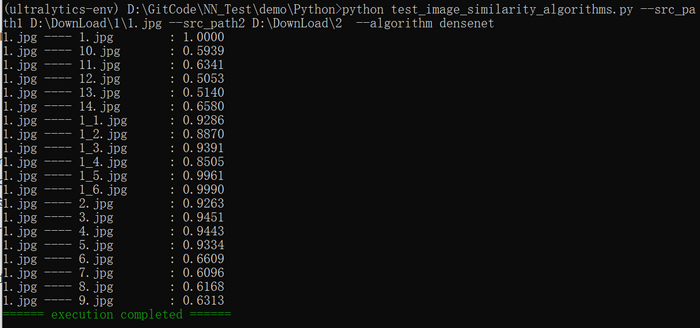

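6. Deep-learning-based algorithms: CNN feature extraction. CNN features are more robust than traditional hand-crafted features; no retraining is needed, since an existing pretrained model can be used directly; once the features are extracted, similarity can be computed with cosine similarity, Euclidean distance, and so on. The following code uses the DenseNet and ResNet pretrained models: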
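The two measures mentioned above behave differently, which a small NumPy sketch can show. The vectors below are made-up stand-ins for CNN feature vectors, and the function names are hypothetical.

```python
import numpy as np


def cosine_similarity(u, v):
    """Cosine of the angle between two feature vectors (0.0 if either is all zeros)."""
    u = np.asarray(u, dtype=np.float64)
    v = np.asarray(v, dtype=np.float64)
    denom = np.linalg.norm(u) * np.linalg.norm(v)
    return 0.0 if denom == 0 else float(np.dot(u, v) / denom)


def euclidean_distance(u, v):
    """Straight-line distance between two feature vectors."""
    u = np.asarray(u, dtype=np.float64)
    v = np.asarray(v, dtype=np.float64)
    return float(np.linalg.norm(u - v))


f1 = [1.0, 2.0, 3.0]
f2 = [2.0, 4.0, 6.0]   # same direction as f1, twice the magnitude

print(cosine_similarity(f1, f2))    # close to 1.0: the directions coincide
print(euclidean_distance(f1, f2))   # sqrt(14), about 3.74: the magnitudes differ
```

Cosine similarity ignores the magnitude of the vectors and has a fixed range of [-1, 1], while Euclidean distance is unbounded and depends on the feature scale.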

```python
def cnn(images1, images2, net_name):
    if net_name == "densenet":
        model = models.densenet121(weights=models.DenseNet121_Weights.DEFAULT)  # densenet121-a639ec97.pth
    else:
        model = models.resnet101(weights=models.ResNet101_Weights.DEFAULT)  # resnet101-cd907fc2.pth

    # drop the final classification layer and keep the feature extractor
    model = nn.Sequential(*list(model.children())[:-1])
    # print(f"model: {model}")
    model.eval()
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    model.to(device)

    transform = transforms.Compose([
        transforms.Resize((224, 224)),
        transforms.ToTensor(),
        transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
    ])

    def _extract_features(name):
        img = Image.open(name).convert("RGB")
        tensor = transform(img).unsqueeze(0).to(device)  # [1, 3, 224, 224]
        with torch.no_grad():
            features = model(tensor)  # densenet: [1, 1024, 7, 7]; resnet: [1, 2048, 1, 1]
            if net_name == "densenet":
                features = F.adaptive_avg_pool2d(features, (1, 1))  # densenet: [1, 1024, 1, 1]
        return features.cpu().numpy().flatten()

    results = []
    for name1 in images1:
        features1 = _extract_features(str(name1))
        for name2 in images2:
            features2 = _extract_features(str(name2))
            results.append((name1.name, name2.name,
                            f"{_cosine_similarity(features1, features2):.4f}"))
    return results
```

The remaining helper code is shown below:

```python
import argparse
from pathlib import Path

import colorama
import cv2
import numpy as np
import torch
import torch.nn as nn
import torch.nn.functional as F
from PIL import Image
from skimage.feature import graycomatrix, graycoprops, local_binary_pattern
from torchvision import models, transforms


def parse_args():
    parser = argparse.ArgumentParser(description="summary of image similarity algorithms")
    parser.add_argument("--algorithm", required=True, type=str,
                        choices=["mse", "psnr", "ssim", "color_histogram", "hog", "sift", "orb", "lbp",
                                 "template_matching", "glcm", "hausdorff", "fft", "dct", "phash",
                                 "densenet", "resnet"],
                        help="specify what kind of algorithm")
    parser.add_argument("--src_path1", required=True, type=str, help="source images directory or image name")
    parser.add_argument("--src_path2", required=True, type=str, help="source images directory or image name")
    parser.add_argument("--suffix1", type=str, default="jpg", help="image suffix")
    parser.add_argument("--suffix2", type=str, default="jpg", help="image suffix")

    args = parser.parse_args()
    return args


def _get_images(src_path, suffix):
    images = []
    path = Path(src_path)
    if path.is_dir():
        images = [img for img in path.rglob("*." + suffix)]
    else:
        # compare the real file extension instead of splitting the name on "."
        if path.exists() and path.suffix == "." + suffix:
            images.append(path)

    if not images:
        raise FileNotFoundError(colorama.Fore.RED + f"there are no matching images: src_path:{src_path}; suffix:{suffix}")
    return images


def _check_images(images1, images2):
    for name1 in images1:
        img1 = cv2.imread(str(name1), cv2.IMREAD_UNCHANGED)
        if img1 is None:
            raise FileNotFoundError(colorama.Fore.RED + f"image not found: {name1}")
        for name2 in images2:
            img2 = cv2.imread(str(name2), cv2.IMREAD_UNCHANGED)
            if img2 is None:  # fixed: the original checked img1 here
                raise FileNotFoundError(colorama.Fore.RED + f"image not found: {name2}")
            if img1.shape != img2.shape:
                raise ValueError(colorama.Fore.RED + f"images must have the same dimensions: {img1.shape}:{img2.shape}")


def _print_results(results):
    for ret in results:
        print(f"{ret[0]} ---- {ret[1]}\t: {ret[2]}")


if __name__ == "__main__":
    colorama.init(autoreset=True)
    args = parse_args()

    images1 = _get_images(args.src_path1, args.suffix1)
    images2 = _get_images(args.src_path2, args.suffix2)
    _check_images(images1, images2)

    if args.algorithm == "mse":  # Mean Squared Error
        results = mse(images1, images2)
    elif args.algorithm == "psnr":  # Peak Signal-to-Noise Ratio
        results = psnr(images1, images2)
    elif args.algorithm == "ssim":  # Structural Similarity Index Measure
        results = ssim(images1, images2)
    elif args.algorithm == "color_histogram":
        results = color_histogram(images1, images2)
    elif args.algorithm == "hog":  # Histogram of Oriented Gradients
        results = hog(images1, images2)
    elif args.algorithm == "sift":  # Scale-Invariant Feature Transform
        results = sift(images1, images2)
    elif args.algorithm == "orb":  # Oriented FAST and Rotated BRIEF
        results = orb(images1, images2)
    elif args.algorithm == "lbp":  # Local Binary Pattern
        results = lbp(images1, images2)
    elif args.algorithm == "template_matching":
        results = template_matching(images1, images2)
    elif args.algorithm == "glcm":  # Gray-Level Co-occurrence Matrix
        results = glcm(images1, images2)
    elif args.algorithm == "hausdorff":  # Hausdorff distance
        results = hausdorff(images1, images2)
    elif args.algorithm == "fft":  # Fast Fourier Transform
        results = fft(images1, images2)
    elif args.algorithm == "dct":  # Discrete Cosine Transform
        results = dct(images1, images2)
    elif args.algorithm == "phash":  # Perceptual Hash
        results = phash(images1, images2)
    elif args.algorithm == "densenet" or args.algorithm == "resnet":
        results = cnn(images1, images2, args.algorithm)

    _print_results(results)
    print(colorama.Fore.GREEN + "====== execution completed ======")
```

The result of running the DenseNet-based algorithm is shown in the figure below:

GitHub: https://github.com/fengbingchun/NN_Test
